OpenVINO: Democratizing High-Performance AI Inference on Any Hardware

The Next Wave of AI: Bringing High-Performance Inference to the Edge

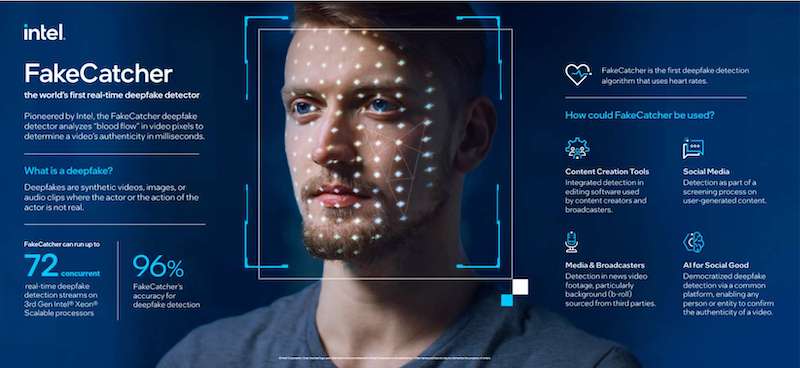

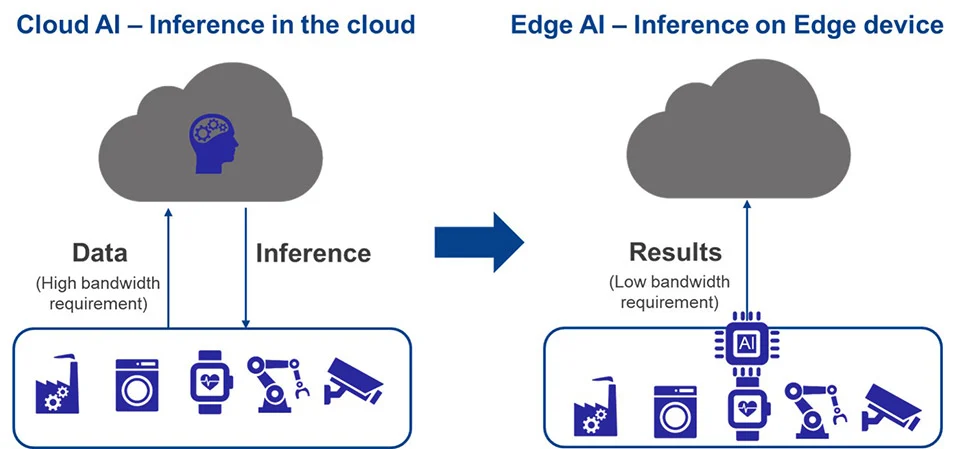

In the rapidly evolving landscape of artificial intelligence, the focus is often dominated by news of massive, cloud-based models from giants like OpenAI, Google DeepMind, and Anthropic. While breakthroughs in training large language models (LLMs) and diffusion models capture headlines, a quieter but equally crucial revolution is happening in AI deployment and inference. The true democratization of AI doesn’t just mean access to powerful APIs; it means empowering developers to run sophisticated models efficiently on their own hardware, from powerful servers to everyday laptops and edge devices. This is where Intel’s OpenVINO™ (Open Visual Inference & Neural Network Optimization) toolkit emerges as a critical enabler, bridging the gap between model development in frameworks like PyTorch and TensorFlow and high-performance, cross-platform deployment.

As AI applications become more integrated into our daily lives, the need for low-latency, cost-effective inference is paramount. Relying solely on cloud infrastructure is not always feasible due to network dependency, privacy concerns, and operational costs. OpenVINO addresses this challenge head-on by providing a unified API and a suite of optimization tools to unlock the full potential of underlying hardware—be it CPUs, integrated GPUs (iGPUs), or dedicated Neural Processing Units (NPUs). Recent developments, including its integration into mainstream operating system repositories, signal a significant milestone in making high-performance AI accessible to a broader community of developers, marking a pivotal moment in the ongoing story of OpenVINO News and the broader AI ecosystem.

Core Concepts: The OpenVINO Workflow Explained

At its heart, OpenVINO is designed to streamline the journey from a trained neural network to an optimized, deployable application. The workflow is centered around two key components: the Model Optimizer and the Inference Engine (now part of the OpenVINO Runtime). Understanding this process is fundamental to leveraging its power.

The Model Optimizer: Your Universal Translator

AI models are created in a variety of frameworks. You might train a computer vision model using TensorFlow, fine-tune a language model from Hugging Face Transformers with PyTorch, or export a model to the Open Neural Network Exchange (ONNX) format for interoperability. The Model Optimizer acts as a universal translator, converting these models into a standardized OpenVINO Intermediate Representation (IR) format. The IR consists of two files: a .xml file describing the network topology and a .bin file containing the weights and biases. During this conversion, the Model Optimizer performs crucial graph optimizations, such as fusing operations, eliminating dead branches, and preparing the model for device-specific execution.

For example, converting an ONNX model is a straightforward command-line operation. This interoperability is a major theme in recent ONNX News, highlighting the importance of standardized formats in a diverse ecosystem that includes tools like TensorRT and frameworks from Meta AI and NVIDIA AI.

# First, ensure you have openvino-dev tools installed

# pip install openvino-dev

# Convert an ONNX model (e.g., mobilenetv2) to OpenVINO IR format

mo --input_model mobilenetv2.onnx --output_dir openvino_model/The OpenVINO Runtime: High-Performance Inference

Once you have the IR files, the OpenVINO Runtime takes over. It’s a C++ library with Python, C, and Java bindings that provides a unified API for running inference across different hardware targets. The runtime automatically detects available hardware (CPU, iGPU, NPU) and uses device-specific plugins to execute the model with maximum efficiency. This “write once, deploy anywhere” philosophy is incredibly powerful, allowing developers to optimize for a target device simply by changing a configuration string, without altering the application logic.

A Practical Guide: Image Classification with OpenVINO

Let’s walk through a practical example of using OpenVINO for an image classification task. We’ll use a pre-trained model converted to the IR format and run inference on a sample image. This example demonstrates the core API and workflow for a typical computer vision application.

Step 1: Setting Up the Environment and Model

First, ensure you have OpenVINO installed. The easiest way is via pip. We also need a model. The Open Model Zoo is an excellent resource, but for this example, we’ll assume you’ve already converted a model like MobileNetV2 to the IR format (model.xml and model.bin).

pip install openvino opencv-python numpyStep 2: Writing the Inference Script

The Python script below outlines the essential steps: initializing the Core object, loading the model, pre-processing the input image, performing inference, and post-processing the results to get the final prediction.

import cv2

import numpy as np

from openvino.runtime import Core

# 1. Initialize OpenVINO Core

core = Core()

# 2. Load the model and compile it for a specific device (e.g., CPU)

# The runtime will automatically select the best device if "AUTO" is used.

model = core.read_model(model="openvino_model/model.xml")

compiled_model = core.compile_model(model=model, device_name="CPU")

# Get input and output nodes

input_layer = compiled_model.input(0)

output_layer = compiled_model.output(0)

# 3. Load and Pre-process the Image

# The model expects a certain input shape, e.g., (1, 3, 224, 224) for N, C, H, W

N, C, H, W = input_layer.shape

image = cv2.imread("path/to/your/image.jpg")

resized_image = cv2.resize(image, (W, H))

# Change data layout from HWC to CHW

input_image = np.expand_dims(resized_image.transpose(2, 0, 1), 0)

# 4. Run Inference

# The compiled_model object is callable

results = compiled_model([input_image])[output_layer]

# 5. Post-process the result

# The output is raw logits; apply softmax and get the top prediction

probs = np.exp(results) / np.sum(np.exp(results))

top_class_id = np.argmax(probs)

# Load labels (e.g., from a imagenet_classes.txt file)

# with open("imagenet_classes.txt", "r") as f:

# labels = [line.strip() for line in f.readlines()]

print(f"Predicted class ID: {top_class_id}")

# print(f"Predicted class name: {labels[top_class_id]}")This simple script forms the foundation for countless AI applications. The same structure can be adapted for object detection, semantic segmentation, or even running models from the Hugging Face Transformers ecosystem for natural language processing tasks.

Advanced Techniques: Unlocking Peak Performance with Quantization

While running a floating-point (FP32) model on a CPU is efficient with OpenVINO, you can achieve significant performance gains by using lower-precision arithmetic, such as 8-bit integers (INT8). This process, known as quantization, reduces the model’s size, memory footprint, and computational requirements, often with a negligible impact on accuracy. This is especially critical for deployment on edge devices with limited resources.

OpenVINO provides the Neural Network Compression Framework (NNCF) for applying post-training quantization (PTQ). PTQ is a powerful technique because it doesn’t require retraining the model. Instead, it analyzes the model’s activations on a small, representative dataset to determine the optimal quantization parameters.

Applying INT8 Quantization with NNCF

The following example demonstrates how to use NNCF to quantize a pre-trained PyTorch model and then convert it to OpenVINO IR format. This workflow is a common practice in MLOps pipelines managed with tools like MLflow or Weights & Biases.

import torch

import torchvision.models as models

import nncf

from nncf.parameters import ModelType

from openvino.tools import mo

# 1. Load a pre-trained PyTorch model

model = models.mobilenet_v2(pretrained=True)

model.eval()

# 2. Create a representative calibration dataset

# This should be a small subset of your training or validation data

# For demonstration, we use random data. In a real scenario, use real images.

calibration_data = [torch.randn(1, 3, 224, 224) for _ in range(100)]

def transform_fn(data_item):

return data_item

calibration_dataset = nncf.Dataset(calibration_data, transform_fn)

# 3. Apply post-training quantization

# The `subset_size` determines how many samples are used for calibration.

quantized_model = nncf.quantize(

model,

calibration_dataset,

model_type=ModelType.TRANSFORMER, # Or ModelType.CNN

subset_size=100

)

# 4. Export the quantized model to ONNX

dummy_input = torch.randn(1, 3, 224, 224)

torch.onnx.export(quantized_model, dummy_input, "quantized_mobilenet.onnx")

# 5. Convert the quantized ONNX model to OpenVINO INT8 IR

# The Model Optimizer will recognize the quantization-aware nodes.

ov_model = mo.convert_model("quantized_mobilenet.onnx")

# You can now run inference with this INT8 model for a significant speedup!

# The inference code remains the same as the previous example.This quantized model will execute significantly faster on CPUs that support instructions like AVX2 and VNNI. This optimization is a key differentiator for OpenVINO and a frequent topic in performance-focused discussions, rivaling other solutions like NVIDIA’s TensorRT for GPU inference.

Best Practices and Ecosystem Integration

To maximize the benefits of OpenVINO, it’s important to follow best practices and understand how it fits into the broader AI and MLOps ecosystem, which includes platforms like AWS SageMaker, Azure Machine Learning, and Vertex AI.

Tips for Optimal Performance

- Use the “AUTO” Device Plugin: For most applications, specifying

device_name="AUTO"incore.compile_model()is the best choice. OpenVINO will intelligently select the most appropriate hardware (e.g., iGPU for latency-sensitive tasks, CPU for throughput) and can even handle automatic failover. - Leverage Asynchronous Inference: For applications that process continuous streams of data (like video analytics), use the asynchronous API. This allows the application to overlap data pre-processing for the next frame while the current frame is being processed by the hardware, maximizing device utilization.

- Tune Performance Hints: OpenVINO allows you to provide performance hints during model compilation, such as

"LATENCY"or"THROUGHPUT". This guides the runtime in optimizing resource allocation, like choosing the number of inference streams. - Match Pre-processing to the Model: Ensure your input data pre-processing (resizing, normalization, color channel ordering) exactly matches what the model was trained on. Mismatches are a common source of poor accuracy.

OpenVINO in the MLOps Lifecycle

OpenVINO is not just an inference engine; it’s a critical component of a modern MLOps pipeline. After a model is trained and tracked using tools like MLflow or Comet ML, an automated CI/CD pipeline can trigger the OpenVINO conversion and quantization process. The resulting IR files can then be versioned and deployed to various environments, from cloud endpoints managed by Azure AI to embedded systems at the far edge. Its integration with popular frameworks and tools like FastAPI or Gradio for building interactive demos makes it a versatile tool for both production and research.

Conclusion: The Future of Accessible AI Inference

The journey of AI from research labs to real-world applications is accelerating, and tools like OpenVINO are paving the way. By providing a robust, high-performance, and hardware-agnostic toolkit, OpenVINO empowers developers to move beyond the constraints of proprietary hardware and expensive cloud APIs. Its focus on optimization, particularly for widely available CPUs and integrated graphics, is a powerful force for democratizing AI. As OpenVINO becomes more deeply integrated into developer ecosystems and operating systems, its role in powering the next generation of intelligent applications—from smart retail and industrial automation to creative tools and on-device assistants—will only continue to grow.

For developers looking to deploy AI models efficiently, exploring the OpenVINO toolkit is no longer just an option; it’s a strategic advantage. Start by converting your favorite TensorFlow, PyTorch, or ONNX model, run your first inference script, and explore the significant performance gains offered by techniques like INT8 quantization. The path to optimized AI deployment is more accessible than ever.