Securing Your MLOps Pipeline: Preventing Sensitive Data Leakage in Azure Machine Learning

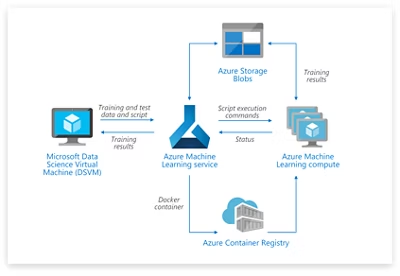

The rapid evolution of machine learning operations (MLOps) has brought powerful platforms like Azure Machine Learning to the forefront, enabling teams to build, train, and deploy models at an unprecedented scale. That scale, however, introduces new security challenges. Modern MLOps environments are intricate ecosystems, blending managed cloud services with a vast array of open-source components. While this fusion accelerates innovation, it can inadvertently create security blind spots, particularly around the handling of sensitive credentials and access tokens. A misconfigured logging mechanism or an insecure authentication pattern can lead to the unintentional exposure of secrets, compromising data, models, and entire cloud environments.

This article provides a technical guide to hardening your MLOps workflows within Azure Machine Learning. We will dissect a common vulnerability pattern, the unintended logging of access tokens, and provide practical, code-driven solutions to mitigate this risk. By embracing secure authentication methods, integrating services like Azure Key Vault, and implementing robust logging practices, you can build a resilient and secure MLOps foundation. These principles are not specific to Azure Machine Learning; they apply broadly across the MLOps landscape, including AWS SageMaker, Vertex AI, and other platforms that integrate tools like MLflow and various Python libraries.

Understanding the Vulnerability: How Access Tokens Leak into Logs

At its core, the vulnerability stems from a collision between two essential MLOps practices: authentication and logging. Machine learning scripts frequently need to authenticate with various services—Azure Storage for data, a feature store, or an external API for data enrichment. Simultaneously, comprehensive logging is crucial for debugging, monitoring experiments, and ensuring reproducibility. The leak occurs when an object or variable containing a sensitive credential is inadvertently captured by a logging mechanism.

The Anatomy of an Insecure Pattern

Many developers, especially when quickly prototyping, might use explicit credentials like API keys or service principal secrets directly in their code or configuration files. When a logging library is configured to be highly verbose for debugging purposes, it can easily capture and persist these secrets. For instance, a common mistake is to log an entire configuration dictionary or a client object that has been initialized with a raw token.

Consider a hypothetical scenario where a script needs to access an external data service. An insecure approach might look like this:

```python
# WARNING: This is an insecure code example. Do NOT use in production.
import logging
import os

import requests

# Configure basic logging to capture everything
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

# Insecurely loading a secret from an environment variable
# In a real-world leak, this could come from a config file or be hardcoded
API_KEY = os.environ.get("EXTERNAL_DATA_API_KEY")

# A configuration dictionary holding the sensitive key
config = {
    "api_endpoint": "https://api.externaldata.com/v1/data",
    "api_key": API_KEY,
    "timeout": 60
}

# The critical mistake: logging the entire configuration object
logging.info(f"Initializing data client with config: {config}")


def fetch_data(cfg):
    try:
        headers = {"Authorization": f"Bearer {cfg['api_key']}"}
        response = requests.get(cfg['api_endpoint'], headers=headers, timeout=cfg['timeout'])
        response.raise_for_status()
        return response.json()
    except requests.exceptions.RequestException as e:
        logging.error(f"Failed to fetch data: {e}")
        return None


# The log output from the INFO line above will contain the raw API key,
# exposing it in Azure ML Studio logs, Application Insights, or wherever logs are sent.
data = fetch_data(config)
```

In this example, the line `logging.info(f"Initializing data client with config: {config}")` directly exposes the `api_key`. When this script runs as an Azure ML job, this log output is stored and becomes visible in the experiment’s outputs, potentially accessible to anyone with read permissions on the workspace.
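If the configuration still needs to appear in the logs for debugging, one option is to log a masked copy instead of the original dictionary. The following is a minimal sketch, not a complete solution: the helper name `masked_view` and the `SENSITIVE_KEYS` set are hypothetical, the key value is a placeholder, and the masking only covers top-level keys.

```python
import logging

# Hypothetical set of key names to treat as sensitive; extend it for your own config schema.
SENSITIVE_KEYS = {"api_key", "token", "password", "secret"}


def masked_view(cfg):
    """Return a shallow copy of cfg with sensitive top-level values replaced by a placeholder."""
    return {
        key: "***" if str(key).lower() in SENSITIVE_KEYS else value
        for key, value in cfg.items()
    }


config = {
    "api_endpoint": "https://api.externaldata.com/v1/data",
    "api_key": "not-a-real-key",  # placeholder value for illustration only
    "timeout": 60,
}

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s")

# Log the masked copy; the original dictionary is left untouched for the actual API call.
logging.info("Initializing data client with config: %s", masked_view(config))
```

The original `config` is not modified, so the request code can keep using it while only the masked copy reaches the log stream.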

Secure by Design: Implementing Robust Authentication in Azure ML

The most effective way to prevent token leakage is to eliminate the need for your code to handle raw credentials in the first place. Azure provides robust identity and access management (IAM) solutions designed for this purpose. The gold standard for service-to-service authentication within Azure is using Managed Identities.

Leveraging Managed Identities for Credential-Free Authentication

A Managed Identity provides an Azure Active Directory (AAD, since renamed Microsoft Entra ID) identity for an Azure resource, such as an Azure ML Compute Instance or Compute Cluster. When your code runs on that compute, it can use this identity to automatically authenticate with other Azure services that support AAD authentication (like Azure Storage, Azure Key Vault, etc.) without any credentials stored in your code.

The azure-identity library simplifies this process with the DefaultAzureCredential class. This powerful class automatically attempts several authentication methods in a sequence, including checking for a Managed Identity, which is ideal for code running on Azure compute.

Here is how you securely connect to your Azure ML workspace from a script running on an Azure ML compute resource with a Managed Identity enabled:

```python
# Secure way to connect to Azure ML Workspace
import logging

from azure.ai.ml import MLClient
from azure.core.exceptions import ClientAuthenticationError
from azure.identity import DefaultAzureCredential

# Configure logging
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

try:
    # DefaultAzureCredential will automatically use the Managed Identity
    # of the compute resource where this code is running.
    # No secrets or keys are needed in the code.
    credential = DefaultAzureCredential()

    # Get a handle to the workspace
    ml_client = MLClient.from_config(credential=credential)
    logging.info(f"Successfully connected to workspace: {ml_client.workspace_name}")

    # Now you can use ml_client to interact with Azure ML resources securely
    # For example, list data assets
    for ds in ml_client.data.list():
        logging.info(f"Found data asset: {ds.name}")

except ClientAuthenticationError:
    # Raised when no credential in the chain can obtain a token.
    # CredentialUnavailableError (exported by azure.identity) is a subclass of it.
    logging.error("Azure credential not available. Ensure Managed Identity is enabled or you are logged in via Azure CLI.")
except Exception as e:
    logging.error(f"An unexpected error occurred: {e}")
```

This approach completely removes secrets from your code. Authentication is handled by the underlying Azure platform and the identity library.
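One side effect of setting the root logger to `INFO`, as the snippet above does, is that third-party libraries may start emitting their own `INFO`-level records into the same log stream. A minimal sketch of raising the threshold for those libraries follows. The logger names are assumptions: `azure` is the parent logger name the Azure SDK for Python documents, and `urllib3` is the name used by that HTTP library; verify both against the versions you have installed. The helper name `quiet_third_party_loggers` is hypothetical.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s")

# Assumed logger names; check them against your installed libraries.
NOISY_LOGGERS = ("azure", "urllib3")


def quiet_third_party_loggers(names=NOISY_LOGGERS, level=logging.WARNING):
    """Raise the threshold of the named loggers so their INFO and DEBUG records are dropped."""
    for name in names:
        logging.getLogger(name).setLevel(level)


quiet_third_party_loggers()
```

Because child loggers inherit the level of their nearest configured ancestor, setting the level on `azure` also applies to loggers such as `azure.identity`, unless one of them has been configured explicitly.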

Advanced Techniques: Managing External Secrets and Sanitizing Logs

While Managed Identities are perfect for Azure-to-Azure communication, MLOps workflows often require access to third-party services that don’t support AAD authentication. For these scenarios, you must handle secrets, but you can do so securely using Azure Key Vault.

Centralizing Secrets with Azure Key Vault

Azure Key Vault is a managed service for securely storing and accessing secrets, keys, and certificates. Instead of placing a third-party API key in an environment variable or config file, you store it in Key Vault and grant your Azure ML compute’s Managed Identity permission to read it.

Your training script can then fetch the secret from Key Vault at runtime. This centralizes secret management and provides a full audit trail of when secrets are accessed.

```python
# Securely fetching a third-party API key from Azure Key Vault
import logging
import os

from azure.identity import DefaultAzureCredential
from azure.keyvault.secrets import SecretClient

logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(levelname)s - %(message)s')

# These should be configured as environment variables in your Azure ML Job
KEY_VAULT_NAME = os.environ.get("KEY_VAULT_NAME")
SECRET_NAME = os.environ.get("SECRET_NAME_FOR_API")

if not KEY_VAULT_NAME or not SECRET_NAME:
    logging.error("KEY_VAULT_NAME and SECRET_NAME_FOR_API environment variables must be set.")
else:
    try:
        # Use the same credential-free approach
        credential = DefaultAzureCredential()

        # Create a SecretClient to connect to the specific Key Vault
        key_vault_uri = f"https://{KEY_VAULT_NAME}.vault.azure.net/"
        secret_client = SecretClient(vault_url=key_vault_uri, credential=credential)

        # Fetch the secret at runtime
        logging.info(f"Fetching secret '{SECRET_NAME}' from Key Vault '{KEY_VAULT_NAME}'...")
        retrieved_secret = secret_client.get_secret(SECRET_NAME)
        api_key = retrieved_secret.value
        logging.info("Successfully retrieved secret. Using it to call external API.")

        # Now use the `api_key` to make your API call.
        # CRITICAL: Be careful not to log the `api_key` variable itself from this point on.

    except Exception as e:
        logging.error(f"Failed to retrieve secret from Key Vault: {e}")
```

Implementing Custom Logging Filters for Proactive Redaction

Even with the best intentions, a sensitive value might still find its way into a log message, perhaps from an exception traceback or a third-party library’s verbose output. As a final layer of defense, you can implement custom logging filters to redact sensitive patterns before they are written.

This involves creating a filter class that uses regular expressions to find and replace patterns that look like keys or tokens. This is a powerful technique relevant to anyone using Python for MLOps, whether their stack includes PyTorch, TensorFlow, or frameworks like LangChain.

```python
# Implementing a custom logging filter to redact sensitive information
import logging
import re


class RedactingFilter(logging.Filter):
    """A logging filter that redacts sensitive patterns from log records."""

    def __init__(self, patterns):
        super().__init__()
        self._patterns = [(re.compile(p), "[REDACTED]") for p in patterns]

    def filter(self, record):
        # Redact the fully formatted message (msg merged with args), then clear
        # args so the formatter does not try to apply them a second time.
        # Note: tracebacks attached via exc_info are formatted later by the
        # handler's formatter and are not covered by this filter.
        record.msg = self._redact(record.getMessage())
        record.args = None
        return True

    def _redact(self, message):
        if not isinstance(message, str):
            return message
        for pattern, replacement in self._patterns:
            message = pattern.sub(replacement, message)
        return message


# --- Usage Example ---
# Define regex patterns for things that look like secrets, most specific first.
# This is an example; tailor patterns to your specific secret formats.
REDACTION_PATTERNS = [
    r"['\"]api_key['\"]:\s*['\"][^'\"]+['\"]",  # "api_key" entry in a JSON or dict dump
    r"key-[a-zA-Z0-9]{16,}",                    # A hypothetical key format
    r"[a-zA-Z0-9]{20,}",                        # Any long alphanumeric run (may over-redact IDs and hashes)
]

logger = logging.getLogger()
logger.setLevel(logging.INFO)

# Set up a handler to see the output
handler = logging.StreamHandler()
handler.setFormatter(logging.Formatter('%(asctime)s - %(levelname)s - %(message)s'))

# Attach the filter to the handler, not the logger: logger-level filters are
# skipped for records that propagate up from child loggers (e.g. libraries).
handler.addFilter(RedactingFilter(REDACTION_PATTERNS))
logger.addHandler(handler)

# --- Test the filter ---
secret_key = "key-a1b2c3d4e5f6g7h8i9j0"
config_dict = {"user": "test", "api_key": secret_key}

logging.info(f"This is a dangerous log with a secret: {secret_key}")
logging.info(f"Logging a config dict: {config_dict}")

# Expected output will show "[REDACTED]" instead of the actual secrets.
```

Best Practices for a Secure MLOps Lifecycle

Securing your Azure ML environment is an ongoing process, not a one-time fix. It requires a combination of technology, process, and awareness. Here are key best practices to integrate into your MLOps lifecycle.

- Embrace the Principle of Least Privilege: Ensure that Managed Identities for compute resources and Service Principals for automation have only the minimum permissions required. For example, a training job might only need read access to a specific storage container, not contributor rights to the entire storage account.

- Regularly Audit Logs and Access: Use Azure Monitor and Log Analytics to create alerts for suspicious activities. Regularly review who has access to your Azure ML workspace and the associated logs. Look for unexpected log patterns or access from unusual locations.

- Conduct Dependency Scanning: Your Python environment is part of your security surface. Use tools like GitHub Dependabot, Snyk, or pip-audit to scan your `requirements.txt` or `conda.yaml` files for open-source packages with known vulnerabilities. This matters especially for users of popular libraries from Hugging Face, OpenAI, and the scientific computing stack.

- Secure Your Data in Transit and at Rest: Use Azure’s built-in features to enforce encryption for data at rest in storage accounts and ensure all communication with Azure services uses TLS (HTTPS). Configure Azure ML workspaces within a Virtual Network (VNet) for an added layer of network isolation.

- Educate Your Team: The human element is often the weakest link. Train data scientists and ML engineers on secure coding practices, emphasizing the dangers of handling credentials directly in code and the benefits of using Managed Identities and Key Vault.
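As one way to act on the log-auditing practice above, downloaded job logs can be scanned for strings that look like leaked credentials. The sketch below is illustrative only: the function name `scan_logs`, the `*.log` file glob, and both patterns are assumptions that should be adapted to the secret formats and log layout you actually have, and the sample log line is fabricated for the demonstration.

```python
import re
import tempfile
from pathlib import Path

# Illustrative patterns only; tailor them to the secret formats you actually use.
SECRET_PATTERNS = [
    re.compile(r"Bearer\s+[A-Za-z0-9\-._~+/]+=*"),              # bearer tokens in headers
    re.compile(r"['\"]api_key['\"]\s*:\s*['\"][^'\"]+['\"]"),   # api_key entries in dict/JSON dumps
]


def scan_logs(log_dir, glob="*.log"):
    """Return (file name, line number) pairs for lines that match any secret pattern."""
    findings = []
    for path in sorted(Path(log_dir).rglob(glob)):
        with path.open(encoding="utf-8", errors="replace") as handle:
            for line_number, line in enumerate(handle, start=1):
                if any(pattern.search(line) for pattern in SECRET_PATTERNS):
                    findings.append((path.name, line_number))
    return findings


# Small self-contained demonstration against a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "job.log"
    sample.write_text(
        "INFO - starting job\n"
        "INFO - config: {'api_key': 'not-a-real-key', 'timeout': 60}\n",
        encoding="utf-8",
    )
    demo_findings = scan_logs(tmp)
    print(demo_findings)
```

The scan reports only file names and line numbers rather than the matching text, so the audit output does not become a second copy of the leaked value.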

Conclusion: Building a Proactive Security Culture in MLOps

The unintentional logging of sensitive access tokens in complex environments like Azure Machine Learning is a serious but preventable risk. By moving away from explicit credential handling and embracing a credential-free workflow with Managed Identities, you can eliminate the root cause of this vulnerability. For the cases where secrets are unavoidable, such as third-party services that do not support AAD authentication, Azure Key Vault provides a secure, centralized, and auditable solution for their management.

Furthermore, implementing defensive layers like custom logging filters provides a safety net to catch and redact sensitive data that might otherwise slip through. Ultimately, securing your MLOps pipeline requires a cultural shift towards proactive security. By embedding best practices (least privilege, regular auditing, and continuous education) into your daily operations, you can harness the power of platforms like Azure Machine Learning to innovate rapidly and securely. The security of the platform is a responsibility shared by both the provider and the user.