OpenAI Weights on SageMaker: Hell Froze Over

Honestly, I had to check the URL three times. Then I checked the SSL certificate. Then I texted a buddy at Amazon to ask if their marketing team had gone rogue. Because seeing “OpenAI” and “SageMaker” in the same sentence—without the word “competitor” in between—feels like a glitch in the simulation.

But here we are. It’s February 2026, and the Azure exclusivity wall just developed a massive, load-bearing crack. As of this morning, we can deploy OpenAI open weights directly on AWS SageMaker. Not an API proxy through Bedrock (though that’s there too), but actual model weights running on our own managed compute.

I spent the last six hours messing around with this in us-east-1, burning through my monthly compute budget just to see if it’s real. And it is. Weirdly good, too.

Why This Matters (And Why I’m Confused)

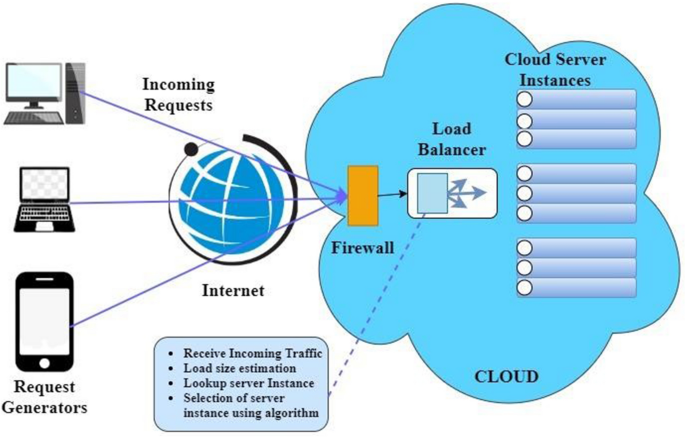

For the last three years, my architecture diagrams have looked like a mess of spaghetti. We keep our data in S3, run compute on EC2, but route all our “smart” calls to Azure OpenAI endpoints via a VPN gateway that flakes out every Tuesday. It was the cost of doing business if you wanted GPT-class reasoning. But now? I can just host the distilled OpenAI weights on an ml.g6.12xlarge instance next to my application servers. No cross-cloud latency. No egress fees to Microsoft. Just local inference.

I’m guessing the antitrust regulators finally got loud enough, or maybe Satya and Andy Jassy decided to bury the hatchet over a very expensive lunch. Either way, we engineers win this one.

Getting It Running: A Quick & Dirty Test

The process is almost identical to deploying a Hugging Face model, which is a relief. You don’t need a custom Docker container. AWS published a new deep learning container (DLC) specifically optimized for these weights. Here’s the snippet that worked for me after I fixed a permission error with my execution role:

    import sagemaker
    from sagemaker import get_execution_role
    from sagemaker.model import Model
    from sagemaker.predictor import Predictor

    role = get_execution_role()

    # The new image URI for OpenAI optimized inference.
    # Make sure you're in a region that supports the g6 family.
    image_uri = sagemaker.image_uris.retrieve(
        framework="openai-inference",
        region="us-east-1",
        version="1.0.0",
        instance_type="ml.g6.12xlarge",
    )

    # Pointing to the S3 bucket where the open weights are staged.
    # Note: You have to accept the EULA in Marketplace first!
    # I wasted 20 mins figuring that out.
    model_data = "s3://aws-models-reference/openai-open-weights/gpt-4o-mini-distilled.tar.gz"

    model = Model(
        image_uri=image_uri,
        model_data=model_data,
        role=role,
        name="openai-distilled-endpoint-v1",
        # The generic Model.deploy() returns None unless a predictor class is set.
        predictor_cls=Predictor,
    )

    # Deploying to a real-time endpoint
    predictor = model.deploy(
        initial_instance_count=1,
        instance_type="ml.g6.12xlarge",
        container_startup_health_check_timeout=600,
    )

    print("Endpoint is live. Finally.")

Benchmarks: The “Open” Tax?

Usually, when a proprietary model goes “open,” performance takes a hit. They trim the weights, quantize it aggressively, or hold back the good fine-tuning datasets. I was skeptical.

But the results surprised me. I ran a standard RAG evaluation dataset (about 500 queries involving technical documentation) against this new SageMaker endpoint and compared it to my existing Llama-4-70B setup. The OpenAI model handled ambiguous instructions noticeably better. On queries where Llama 4 usually hallucinates a generic answer, the OpenAI model asked clarifying questions or gave a more nuanced “I don’t know.”
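If you want to run a comparison like this yourself, here is a minimal sketch of what the harness could look like. Treat it as a starting point under some loud assumptions: the request body (`{"inputs": ...}`), the response field (`generated_text`), and the phrase list in `ABSTAIN_MARKERS` are all hypothetical, so check the container's documentation for the real schema. The only AWS call used is boto3's `invoke_endpoint` on the `sagemaker-runtime` client.

```python
import json
from typing import Callable, Iterable

# Hypothetical phrases that suggest the model declined to guess.
ABSTAIN_MARKERS = (
    "i don't know",
    "i'm not sure",
    "could you clarify",
    "can you clarify",
)


def make_invoker(endpoint_name: str, region: str = "us-east-1") -> Callable[[str], str]:
    """Return a function that sends one prompt to a SageMaker real-time endpoint."""
    import boto3  # imported lazily so the scoring helpers run without AWS access

    runtime = boto3.client("sagemaker-runtime", region_name=region)

    def invoke(prompt: str) -> str:
        response = runtime.invoke_endpoint(
            EndpointName=endpoint_name,
            ContentType="application/json",
            # Assumed request schema, not confirmed for this container.
            Body=json.dumps({"inputs": prompt}),
        )
        payload = json.loads(response["Body"].read())
        # Assumed response schema, not confirmed for this container.
        return payload["generated_text"]

    return invoke


def is_abstention(answer: str) -> bool:
    """Crude check: does the answer admit uncertainty or ask for clarification?"""
    text = answer.lower().replace("\u2019", "'")  # normalise curly apostrophes
    return any(marker in text for marker in ABSTAIN_MARKERS)


def abstention_rate(invoke: Callable[[str], str], queries: Iterable[str]) -> float:
    """Fraction of queries where the model abstained instead of answering."""
    answers = [invoke(query) for query in queries]
    if not answers:
        return 0.0
    return sum(is_abstention(answer) for answer in answers) / len(answers)
```

You would build one invoker per endpoint with `make_invoker(...)` and pass each to `abstention_rate` with the same list of deliberately ambiguous queries. Keep in mind that the abstention rate is only a proxy: it says nothing about whether the confident answers were actually correct, so you would still want a graded accuracy pass on top.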

The Gotcha: It’s Not “Open Source”

Let’s be real: “Open Weight” is marketing speak. I read the license file included in the tarball (yes, I’m that person). It’s restrictive. You can use it for commercial inference, sure, but you can’t use the outputs to train other models, and there are strict audit clauses.

But for 90% of enterprise use cases? This is fine. Just remember to check your instance quotas before you get excited, and don’t confuse quota with capacity: I’m already seeing InsufficientInstanceCapacity errors in us-east-1 this afternoon, and no quota increase fixes those. Everyone is trying this at once.
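One way to cope with capacity errors is to retry with backoff and fall back to another instance type. The sketch below is a plain-Python wrapper and not part of the SageMaker SDK: `deploy_with_retry`, its parameters, and the fallback instance type in the usage note are all hypothetical. It also assumes the failure surfaces as an exception whose message contains the string `InsufficientInstanceCapacity`.

```python
import time
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")

# Assumption: capacity failures carry this string in the exception message.
CAPACITY_MARKER = "InsufficientInstanceCapacity"


def deploy_with_retry(
    deploy: Callable[[str], T],
    instance_types: Sequence[str],
    attempts_per_type: int = 3,
    base_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try each instance type in order, backing off exponentially on capacity errors."""
    last_error: Optional[Exception] = None
    for instance_type in instance_types:
        for attempt in range(attempts_per_type):
            try:
                return deploy(instance_type)
            except Exception as err:
                # Anything that is not a capacity problem is re-raised immediately.
                if CAPACITY_MARKER not in str(err):
                    raise
                last_error = err
                sleep(base_delay_s * 2 ** attempt)
    raise RuntimeError(
        "No capacity for any of: " + ", ".join(instance_types)
    ) from last_error
```

With the deployment snippet from earlier, usage might look like `deploy_with_retry(lambda t: model.deploy(initial_instance_count=1, instance_type=t, container_startup_health_check_timeout=600), ["ml.g6.12xlarge", "ml.g6.24xlarge"])`. You may also need to clean up any endpoint left in a failed state between attempts.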

My Take

I never thought I’d see the day when I could pip install sagemaker and push an OpenAI model. It simplifies my stack immensely. I can finally kill that flaky VPN gateway to Azure.