Unlocking Multimodal Reasoning: A Deep Dive into the New Wave of Thinking Models on Hugging Face

Introduction

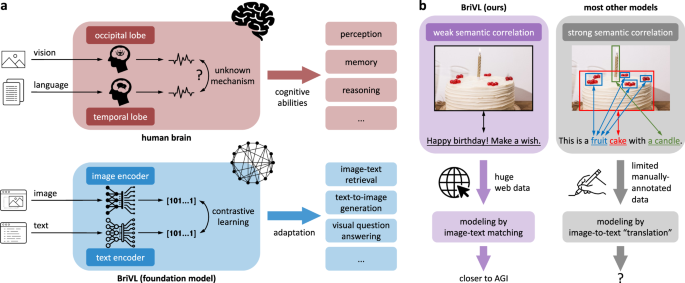

The landscape of artificial intelligence is undergoing a seismic shift, moving rapidly beyond text-only paradigms into a rich, multimodal era. While Large Language Models (LLMs) have dominated the headlines for the past two years, the current frontier is defined by Large Multimodal Models (LMMs) that can perceive, reason, and generate content across text, images, and video. Specifically, a new class of “Thinking” models is emerging within Hugging Face News, characterized by their ability to employ Chain-of-Thought (CoT) reasoning directly on visual inputs.

Recent developments in the open-source community have introduced lightweight yet potent architectures—specifically in the 20B to 30B parameter range—that challenge the dominance of proprietary giants. These models are not merely image captioners; they are reasoning engines capable of analyzing complex charts, solving visual math problems, and understanding nuance in photography. This evolution is critical for developers and data scientists tracking PyTorch News and TensorFlow News, as it signals a democratization of high-level visual reasoning capabilities.

In this comprehensive guide, we will explore the architecture of these new “Thinking” multimodal models, demonstrate how to implement them using the Hugging Face ecosystem, and discuss optimization strategies for deployment. Whether you are following Google DeepMind News or the latest updates from Meta AI News, understanding how to leverage these open-source breakthroughs is essential for building the next generation of AI applications.

Section 1: The Architecture of “Thinking” Multimodal Models

From VQA to Visual Reasoning

Traditionally, Visual Question Answering (VQA) models operated on a relatively simple mechanism: encode the image, encode the text, and fuse them to predict an answer. However, these older architectures often struggled with complex logic. The latest wave of models, often highlighted in Hugging Face Transformers News, introduces a “System 2” thinking process. This mimics human cognition by breaking down a visual problem into intermediate steps before concluding.

For instance, when presented with an image of a physics problem, a standard model might guess the answer. A “Thinking” model, however, will generate an internal monologue: identifying the variables in the diagram, recalling the relevant formula, and calculating the result step-by-step. This approach is heavily influenced by techniques seen in OpenAI News and Anthropic News regarding text-based reasoning, now applied to the visual domain.

Core Components

The architecture of these trending models typically consists of three main pillars:

- Vision Encoder: Often based on ViT (Vision Transformer) or SigLIP, responsible for converting raw pixels into semantic embeddings.

- LLM Backbone: A dense language model (often ranging from 7B to 30B parameters) that acts as the “brain.”

- The Connector: A sophisticated projection layer (like an MLP or Q-Former) that aligns visual tokens with the LLM’s text space.

What sets the new 28B-class models apart is the integration of specific “reasoning tokens” or training methodologies that enforce CoT generation. This is a significant topic in JAX News and Keras News circles, where efficient training of these connectors is a primary research focus.

Basic Setup and Environment

To interact with these models, you need a robust environment. We will utilize the Hugging Face transformers library. Ensure you have the latest version, as support for new architectures is added frequently.

Artificial intelligence analyzing image – Convergence of artificial intelligence with social media: A …

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, AutoProcessor

from PIL import Image

import requests

# Check for GPU availability - Essential for 28B+ models

device = "cuda" if torch.cuda.is_available() else "cpu"

print(f"Device set to: {device}")

# Example configuration for a generic high-performance VLM

# We use 'trust_remote_code=True' as many new thinking models require custom modeling code

model_id = "generic-thinking-vlm-28b-preview"

def setup_environment():

try:

# Verify CUDA and memory availability

if device == "cuda":

vram = torch.cuda.get_device_properties(0).total_memory / 1e9

print(f"Available VRAM: {vram:.2f} GB")

if vram < 24:

print("Warning: 28B models typically require >48GB VRAM in FP16. Consider quantization.")

except Exception as e:

print(f"Environment setup warning: {e}")

setup_environment()Section 2: Implementation and Inference

Loading the Model

Implementing a multimodal reasoning model requires careful memory management. A 28B parameter model in half-precision (FP16) requires approximately 56GB of VRAM. For those without enterprise-grade hardware (like H100s discussed in NVIDIA AI News), leveraging accelerate for device mapping or bitsandbytes for quantization is mandatory.

Below is a practical example of loading a multimodal model capable of reasoning. We will simulate the interaction with a model that supports “thinking” tags or prompts.

from transformers import BitsAndBytesConfig

# 1. Configure Quantization to fit in consumer hardware (e.g., dual 3090s or A6000)

quantization_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_compute_dtype=torch.float16,

bnb_4bit_quant_type="nf4"

)

# 2. Load the Processor (handles image resizing and text tokenization)

processor = AutoProcessor.from_pretrained(

"organization/thinking-vlm-28b",

trust_remote_code=True

)

# 3. Load the Model with Quantization

model = AutoModelForCausalLM.from_pretrained(

"organization/thinking-vlm-28b",

quantization_config=quantization_config,

device_map="auto", # Automatically spreads layers across GPUs

trust_remote_code=True

).eval()

print("Model loaded successfully with 4-bit quantization.")Executing a “Thinking” Inference

The magic happens in the prompt engineering. Unlike standard image captioning, we want to trigger the model’s reasoning capabilities. We pass an image and a complex query. This workflow is compatible with tools often mentioned in LangChain News and LlamaIndex News for building agents.

def run_multimodal_inference(image_path, user_prompt):

# Load image

raw_image = Image.open(image_path).convert('RGB')

# Construct a prompt that encourages Chain of Thought

# Some models use specific tokens like <thinking>, others respond to natural language

prompt = f"<image>\nUser: {user_prompt}\nAssistant: Let's think step by step to solve this."

# Process inputs

inputs = processor(

text=prompt,

images=raw_image,

return_tensors="pt"

).to(device)

# Generate response

with torch.no_grad():

generated_ids = model.generate(

**inputs,

max_new_tokens=512,

do_sample=True,

temperature=0.4, # Lower temperature for more logical reasoning

top_p=0.9

)

# Decode

generated_text = processor.batch_decode(

generated_ids,

skip_special_tokens=True

)[0]

return generated_text

# Example Usage

# image_path = "complex_chart.jpg"

# response = run_multimodal_inference(image_path, "Analyze the trend in this chart and predict the next quarter.")

# print(response)In this snippet, the prompt “Let’s think step by step” is crucial. It aligns with the findings in Google DeepMind News regarding CoT prompting, significantly improving accuracy on math and logic tasks embedded within images.

Section 3: Advanced Techniques and Optimization

Serving with vLLM

For production environments, using raw PyTorch inference loops is inefficient. vLLM News has been dominating the optimization conversation recently. vLLM is a high-throughput and memory-efficient library for LLM serving. Recent updates have expanded vLLM support to multimodal models, drastically reducing latency.

Integrating vLLM allows for continuous batching and PagedAttention, which is vital if you are building an application scaling to thousands of users, perhaps hosted on AWS SageMaker News or Azure Machine Learning News infrastructure.

# Conceptual example of serving a VLM with vLLM

# Note: Ensure vllm is installed via pip install vllm

from vllm import LLM, SamplingParams

def initialize_vllm_engine():

# vLLM handles memory management internally

llm = LLM(

model="organization/thinking-vlm-28b",

trust_remote_code=True,

tensor_parallel_size=2, # Use 2 GPUs

gpu_memory_utilization=0.90

)

return llm

def batch_inference(llm_engine, prompts, images):

sampling_params = SamplingParams(temperature=0.2, max_tokens=1024)

# vLLM specific input formatting for multimodal

# This structure varies slightly based on vLLM version updates

inputs = []

for p, img in zip(prompts, images):

inputs.append({

"prompt": p,

"multi_modal_data": {"image": img}

})

outputs = llm_engine.generate(inputs, sampling_params)

for output in outputs:

print(f"Generated: {output.outputs[0].text}")

# This approach is significantly faster than standard Transformers generate()Integration with Vector Databases (RAG)

To reduce hallucinations—a common issue discussed in Cohere News and Mistral AI News—it is best practice to couple these “Thinking” models with Retrieval-Augmented Generation (RAG). By embedding image descriptions or extracting text from images and storing them in vector databases like those featured in Pinecone News, Milvus News, or Qdrant News, you can ground the model’s reasoning in retrieved facts.

Artificial intelligence analyzing image – Artificial Intelligence Tags – SubmitShop

For example, if the model is analyzing a technical schematic, retrieving the official manual pages from a Weaviate instance and feeding that text alongside the image into the context window allows the model to “think” with reference material in hand.

Section 4: Best Practices and Ecosystem Landscape

Evaluation and Monitoring

Deploying these models requires rigorous testing. You cannot rely solely on visual inspection. Tools highlighted in MLflow News, Weights & Biases News, and Comet ML News are essential for tracking experiments. For multimodal evaluation, consider using benchmarks like MMBench or MathVista.

Furthermore, LangSmith News has highlighted the importance of tracing chains of thought. When a model outputs a reasoning path, log it. If the final answer is wrong, analyzing the reasoning trace allows you to fine-tune the specific step where logic failed.

The Open Source vs. Proprietary Balance

While OpenAI News often focuses on GPT-4V, and Google DeepMind News on Gemini, the open-source community is catching up. Models like the one inspiring this article (trending on Hugging Face) offer data privacy and customization that APIs cannot match. This is particularly relevant for sectors like healthcare or finance, where data cannot leave the premise.

However, running a 28B model requires significant compute. For those without local GPUs, serverless options like Modal News, Replicate News, or RunPod News offer excellent alternatives to host these specific Hugging Face models on-demand.

Artificial intelligence analyzing image – Artificial intelligence in healthcare: A bibliometric analysis …

Optimization Libraries

Don’t ignore the lower-level optimizations. DeepSpeed News frequently covers Zero Redundancy Optimizer (ZeRO) techniques which can help fine-tune these models on limited hardware. Similarly, ONNX News and TensorRT News are making strides in compiling multimodal graphs for faster inference, though support for the very latest architectures often lags slightly behind PyTorch.

Conclusion

The release of high-performance, open-source multimodal reasoning models marks a pivotal moment in AI development. We are moving away from simple pattern matching toward genuine visual understanding and logic. As highlighted by the trends in Hugging Face News, the community is rapidly adopting architectures that can “think” before they speak.

For developers, the toolkit is expanding. From LlamaFactory News for fine-tuning to Gradio News and Streamlit News for building rapid prototypes, the barrier to entry is lowering even as the models become more complex. By leveraging quantization, efficient serving with vLLM, and sound architectural patterns, you can deploy these powerful 28B-parameter reasoning engines today.

As you experiment with these models, keep an eye on AutoML News and Optuna News for hyperparameter tuning, and stay updated with Stability AI News for advancements in image generation that may soon merge with these reasoning capabilities. The future of AI is not just about reading text; it’s about seeing and understanding the world, one reasoning step at a time.