Unlocking Gemini 2.5 Pro: Building Scalable Multimodal Pipelines with Go

Introduction: A New Era in Open Source AI

The landscape of artificial intelligence has just witnessed a seismic shift. With the latest Google DeepMind News, the release of Gemini 2.5 Pro as an open-source model under the Apache 2.0 license marks a turning point in how developers approach multimodal applications. For years, the industry has oscillated between closed-source APIs and smaller open-weight models. A model with a 2 million token context window, native multimodal capabilities from day one, and permissive licensing changes the calculus for enterprise engineering.

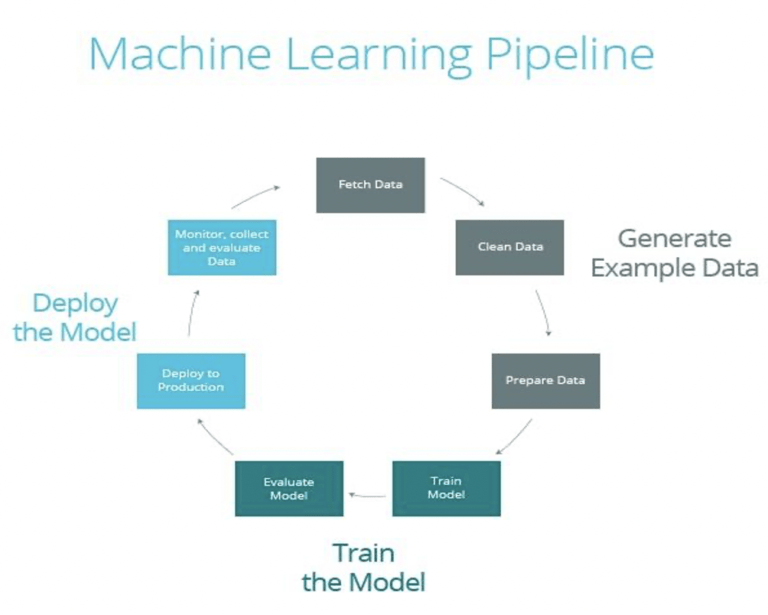

While data scientists following TensorFlow News and PyTorch News are eager to fine-tune these weights, backend engineers face a different challenge: building performant, scalable infrastructure that can sustain the throughput such a model demands. This is where the Go programming language (Golang) shines. With its lightweight concurrency model and robust standard library, Go is well suited to orchestrating the pipelines required to feed a 2M token context window efficiently.

In this article, we will explore how to use Gemini 2.5 Pro within a Go-based architecture. We will move beyond simple Python scripts and build production-grade components with Go’s interfaces, goroutines, and channels. We will also touch on how this integrates with the broader ecosystem, including frameworks such as LangChain and LlamaIndex and vector databases such as Milvus and Pinecone.

Section 1: Structuring Multimodal Inputs with Go Interfaces

One of the headline features of Gemini 2.5 Pro is its native multimodal capability. Rather than bolting a separate vision model onto a language model, the architecture processes text, images, and video natively. For developers who follow OpenAI News or Anthropic News, native multimodality calls for a rethink of input data structures.

In dynamic languages like Python, mixed input types are often handled with loose dictionaries. However, to build a robust system that integrates with Hugging Face libraries or local inference servers such as vLLM, we want static typing so that mismatched inputs surface at compile time rather than as runtime errors. Go’s interface system lets us build a polymorphic input processor that puts every modality behind a single contract.

Below is an example of how to define a robust input system for a multimodal agent. This code demonstrates how to use interfaces to standardize different data modalities before sending them to the model inference engine.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"os"
)

// ModalityType defines the type of input data.
type ModalityType string

const (
	Text  ModalityType = "text"
	Image ModalityType = "image"
	Video ModalityType = "video"
)

// InputData represents a generic input for Gemini 2.5 Pro.
type InputData interface {
	GetType() ModalityType
	Process() (string, error) // Returns the processed payload (e.g., base64 or raw text)
}

// TextPrompt handles plain-text instructions.
type TextPrompt struct {
	Content string
}

func (t TextPrompt) GetType() ModalityType {
	return Text
}

func (t TextPrompt) Process() (string, error) {
	// In a real scenario, this might involve token counting or sanitization.
	return t.Content, nil
}

// ImageInput handles visual data.
type ImageInput struct {
	FilePath string
}

func (i ImageInput) GetType() ModalityType {
	return Image
}

func (i ImageInput) Process() (string, error) {
	// Read the image and encode it as base64 for transmission.
	// os.ReadFile replaces the deprecated ioutil.ReadFile (Go 1.16+).
	raw, err := os.ReadFile(i.FilePath)
	if err != nil {
		return "", err
	}
	return base64.StdEncoding.EncodeToString(raw), nil
}

// ProcessBatch prepares a batch of multimodal inputs, skipping any that fail.
func ProcessBatch(inputs []InputData) {
	fmt.Println("Preparing context for Gemini 2.5 Pro...")
	for _, input := range inputs {
		data, err := input.Process()
		if err != nil {
			fmt.Printf("Error processing %s: %v\n", input.GetType(), err)
			continue
		}
		fmt.Printf("Processed %s input: %d bytes\n", input.GetType(), len(data))
	}
}

func main() {
	// Simulating a multimodal request.
	batch := []InputData{
		TextPrompt{Content: "Analyze the architectural style of this building."},
		ImageInput{FilePath: "./architecture.jpg"},
	}
	ProcessBatch(batch)
}
```

This approach ensures that whether you are working with Stability AI for image generation or Cohere for embedding retrieval, your Go application maintains a strict contract on how data is ingested. This is critical when managing the 2M token context, as malformed binary data can silently fail or consume unnecessary tokens.
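One way to guard against malformed binary data is to check what the bytes actually are before encoding them. The sketch below, assuming an allowlist of image types chosen purely for illustration, sniffs the real content type with the standard library's `http.DetectContentType` and rejects anything else. The `InlineData` struct and its `mime_type`/`data` JSON tags are modeled loosely on the Gemini API's inline-data part; treat those field names as an assumption and match them to whichever inference server you actually call.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
	"net/http"
)

// InlineData is a hypothetical wire shape for a binary part. The JSON tags
// are an assumption modeled on the Gemini API's inline_data object.
type InlineData struct {
	MimeType string `json:"mime_type"`
	Data     string `json:"data"`
}

// allowedMIME lists the media types this pipeline accepts (illustrative only).
var allowedMIME = map[string]bool{
	"image/jpeg": true,
	"image/png":  true,
	"image/webp": true,
}

// NewInlineData sniffs the real content type of raw bytes and rejects anything
// outside the allowlist before it is base64-encoded and sent to the model.
func NewInlineData(raw []byte) (InlineData, error) {
	if len(raw) == 0 {
		return InlineData{}, fmt.Errorf("empty payload")
	}
	// DetectContentType inspects at most the first 512 bytes.
	mime := http.DetectContentType(raw)
	if !allowedMIME[mime] {
		return InlineData{}, fmt.Errorf("unsupported content type %q", mime)
	}
	return InlineData{
		MimeType: mime,
		Data:     base64.StdEncoding.EncodeToString(raw),
	}, nil
}

func main() {
	// Only the 8-byte PNG signature, which is enough for content sniffing.
	png := []byte("\x89PNG\r\n\x1a\n")
	part, err := NewInlineData(png)
	if err != nil {
		fmt.Println("rejected:", err)
		return
	}
	out, _ := json.Marshal(part)
	fmt.Println(string(out))

	// Text masquerading as an image is caught before it reaches the model.
	if _, err := NewInlineData([]byte("not an image")); err != nil {
		fmt.Println("rejected:", err)
	}
}
```

Sniffing the bytes rather than trusting a file extension means a mislabeled upload fails fast in your own process instead of wasting a round trip and context tokens.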

Section 2: Concurrency and Context Loading with Goroutines

The 2 million token context window is a game-changer, eclipsing limitations discussed in recent Mistral AI News and Meta AI News. However, gathering enough material to fill a 2M token window is largely I/O bound. If you retrieve documents from vector databases such as Weaviate, Chroma, or Qdrant one after another, the round-trip latencies add up before the model even begins inference.

Go’s goroutines provide a lightweight mechanism to fetch and preprocess data in parallel. This is essential for RAG (Retrieval-Augmented Generation) pipelines, where you might need to pull data from Snowflake data warehouses, AWS SageMaker feature stores, and local file systems simultaneously to construct the prompt.

The following example demonstrates using `sync.WaitGroup` to concurrently fetch context data from multiple sources to populate the Gemini 2.5 Pro context window efficiently.

```go
package main

import (
	"fmt"
	"strings"
	"sync"
	"time"
)

// ContextSource represents a data source for the model.
type ContextSource struct {
	Name string
	URI  string
}

// FetchContext simulates retrieving data from external sources (vector DBs, APIs).
func FetchContext(source ContextSource, wg *sync.WaitGroup, results chan<- string) {
	defer wg.Done()
	fmt.Printf("Starting fetch from %s...\n", source.Name)

	// Simulate network latency (e.g., querying Milvus or Pinecone).
	time.Sleep(500 * time.Millisecond)

	// Mocked data return.
	data := fmt.Sprintf("Context data from %s (URI: %s)", source.Name, source.URI)
	results <- data
}

func BuildMassiveContext() {
	sources := []ContextSource{
		{"VectorDB_Shard1", "tcp://milvus-cluster:19530"},
		{"KnowledgeGraph", "http://neo4j-instance:7474"},
		{"DocumentStore", "s3://corporate-docs/2024"},
		{"CodeRepository", "git://internal-repo/backend"},
	}

	var wg sync.WaitGroup
	// Buffered to len(sources) so no producer blocks on send, even if consumption is slow.
	contextChannel := make(chan string, len(sources))
	start := time.Now()

	// Launch a goroutine for each data source.
	for _, src := range sources {
		wg.Add(1)
		go FetchContext(src, &wg, contextChannel)
	}

	// Wait for all fetches in a separate goroutine, then close the channel
	// so the range loop below terminates.
	go func() {
		wg.Wait()
		close(contextChannel)
	}()

	// Aggregate results as they arrive (arrival order is nondeterministic).
	// strings.Builder avoids repeated copying when the context grows large.
	var fullContext strings.Builder
	for data := range contextChannel {
		fullContext.WriteString(data)
		fullContext.WriteString("\n")
	}

	elapsed := time.Since(start)
	fmt.Printf("Constructed context in %s. Total length: %d characters.\n", elapsed, fullContext.Len())
	fmt.Println("Ready to send to Gemini 2.5 Pro Inference Engine.")
}

func main() {
	BuildMassiveContext()
}
```

This pattern is vital. In the simulation, the four 500 ms fetches finish in roughly 500 ms total instead of the 2 s a sequential loop would take. While JAX News and NVIDIA AI News focus on the speed of matrix multiplication on GPUs, the bottleneck for end-to-end application performance is often the data engineering pipeline. By parallelizing context assembly, Go helps keep the GPU from sitting idle while it waits for data.

Section 3: Streaming Responses with Channels

With a large context window comes the potential for long, detailed responses. Making users wait for the entire generation to complete before showing anything is poor UX. Modern applications, shaped by products such as ChatGPT and Perplexity, are expected to stream output in real time.

Go channels are a natural fit for this producer-consumer pattern. Whether you run the model locally through Ollama or call a Vertex AI endpoint, handling the token stream requires a non-blocking approach. This is particularly relevant when integrating with frontend tools such as Streamlit and Chainlit.

Here is how to implement a streaming token handler that decouples the model's generation speed from the client's consumption speed.

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

// Token represents a single unit of output from the LLM.
type Token struct {
	ID      int
	Content string
	IsLast  bool
}

// GeminiStreamSimulator mimics the streaming API of the model.
// The prompt is unused because the response is hard-coded.
func GeminiStreamSimulator(prompt string, stream chan<- Token) {
	// The producer owns the channel, so it is responsible for closing it.
	defer close(stream)

	// Simulate time to first token.
	time.Sleep(1 * time.Second)

	response := []string{"Gemini", " 2.5", " Pro", " is", " analyzing", " your", " multimodal", " data", "."}
	for i, word := range response {
		// Simulate variable token generation time.
		time.Sleep(time.Duration(rand.Intn(100)) * time.Millisecond)
		stream <- Token{
			ID:      i,
			Content: word,
			IsLast:  i == len(response)-1,
		}
	}
}

// ClientConsumer represents a WebSocket or HTTP handler sending data to the frontend.
func ClientConsumer(stream <-chan Token) {
	fmt.Println("--- Stream Started ---")
	for token := range stream {
		// In a real app, this would write to an http.ResponseWriter and flush.
		fmt.Print(token.Content)
	}
	fmt.Println("\n--- Stream Ended ---")
}

func main() {
	// The channel is the pipe between the model and the user. A small buffer
	// lets the producer run up to 16 tokens ahead of a slow consumer.
	tokenStream := make(chan Token, 16)

	// Start the model inference in a background goroutine.
	go GeminiStreamSimulator("Explain quantum computing", tokenStream)

	// Block the main goroutine while consuming the stream.
	ClientConsumer(tokenStream)
}
```

This architecture keeps memory usage low: instead of buffering a 5,000-word essay, the server forwards it token by token. That efficiency is one reason Go is often chosen over Python for the inference serving layer, even when model training (covered in Fast.ai News and Kaggle News) happens in Python.

Section 4: Integration and Best Practices in the MLOps Ecosystem

Deploying Gemini 2.5 Pro involves more than the model and the code; it requires a robust MLOps strategy. Because the model is open source, you might run it on RunPod or Modal infrastructure, or orchestrate it with Ray. Regardless of the hosting, observability is key.

Observability and Tracing

When chaining calls (a pattern popularized by LangChain), you must trace execution. Integrating tools such as Weights & Biases, Comet ML, or MLflow is standard practice. In Go, we can use the middleware (decorator) pattern to wrap model interactions with logging and metrics.

Handling 2M Context Costs and Latency

Just because the model supports 2M tokens doesn't mean every request should use them. Processing 2M tokens requires significant compute, which is where optimization tools such as DeepSpeed and TensorRT become relevant.

- Caching: Implement semantic caching with Redis or FAISS to store the results of common queries.

- Context Optimization: Use summarization techniques (often discussed in Sentence Transformers News) to compress conversation history before feeding it to Gemini.

- Graceful Degradation: If the model server is overloaded, use Go's `context` package to set strict timeouts and fall back to smaller models or cached responses.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// InferenceService defines the contract for model interaction.
type InferenceService interface {
	Predict(ctx context.Context, input string) (string, error)
}

type GeminiService struct{}

func (g *GeminiService) Predict(ctx context.Context, input string) (string, error) {
	// Simulate a long-running inference task that honors cancellation.
	select {
	case <-time.After(2 * time.Second): // Simulate 2s processing
		return "Analysis complete based on 2M token context.", nil
	case <-ctx.Done():
		return "", ctx.Err()
	}
}

func main() {
	svc := &GeminiService{}

	// Set a strict timeout for the request.
	// This is critical in production to prevent hanging connections.
	ctx, cancel := context.WithTimeout(context.Background(), 1500*time.Millisecond)
	defer cancel()

	fmt.Println("Sending request to Gemini 2.5 Pro...")
	result, err := svc.Predict(ctx, "Analyze this massive dataset")
	if err != nil {
		fmt.Println("Error:", err) // context deadline exceeded
		// A real service would switch to a smaller model or cached response here.
		fmt.Println("Fallback: Switching to smaller model or cached response.")
	} else {
		fmt.Println("Response:", result)
	}
}
```

This snippet highlights the importance of the `context` package in Go. On platforms covered in Azure Machine Learning News and DataRobot News, resource management translates directly into cost. If a request hangs, cancelling its context signals every function that watches `ctx.Done()` to stop, freeing resources for other users. Cancellation is cooperative, though: it only helps when downstream code checks the context, as `Predict` does here.

Conclusion

The release of Gemini 2.5 Pro as an open-source, multimodal powerhouse is arguably the most significant update in Google DeepMind News this year. It challenges the dominance of closed APIs and empowers developers to build deeply integrated, private, and scalable AI solutions. While the model weights might be trained using frameworks familiar to readers of PyTorch News, the production deployment of such large models demands the performance and concurrency that Go is well positioned to provide.

By using Go's interfaces for multimodal polymorphism, goroutines for parallel context loading, and channels for real-time streaming, developers can build pipelines that fully exploit the 2M token context window without sacrificing user experience. As the ecosystem evolves, with tools like LangSmith and LlamaFactory emerging to support these workflows, the combination of state-of-the-art open models and high-performance systems programming will define the next generation of AI applications.

The barrier to entry has been lowered. The weights are available. The context is massive. It is now up to the engineering community to build the infrastructure that brings Gemini 2.5 Pro to life.