Scaling Vector Search: Architecting High-Performance AI with Qdrant and Cloud-Native Infrastructure

Introduction: The New Era of AI Infrastructure

The landscape of Artificial Intelligence is undergoing a seismic shift, moving from experimental notebooks to massive, production-grade deployments. At the heart of this transformation lies the need for efficient information retrieval. As Generative AI models continue to grow in complexity, the ability to provide them with relevant, real-time context, a technique known as Retrieval-Augmented Generation (RAG), has become paramount. This is why developments in vector database technology, Qdrant among them, matter to developers and data architects.

Recent developments in the ecosystem highlight a growing trend: the convergence of specialized cloud infrastructure with high-performance vector search engines. While OpenAI often dominates the headlines with new model capabilities, the underlying infrastructure that powers these models is equally important. Without a robust retrieval layer, even the most advanced Large Language Models (LLMs) are prone to hallucinations and lack domain-specific knowledge.

This article explores the technical synergy between cloud-native computing and Qdrant, a leading open-source vector database written in Rust. We will delve into how optimized cloud instances, ranging from high-frequency CPUs to GPU-accelerated compute, can speed up your vector search. We will also look at the broader AI landscape, including models from Anthropic, Mistral AI, and Google DeepMind, to understand how modern vector stores serve as long-term memory for these diverse intelligence engines.

Section 1: Core Concepts of High-Performance Vector Search

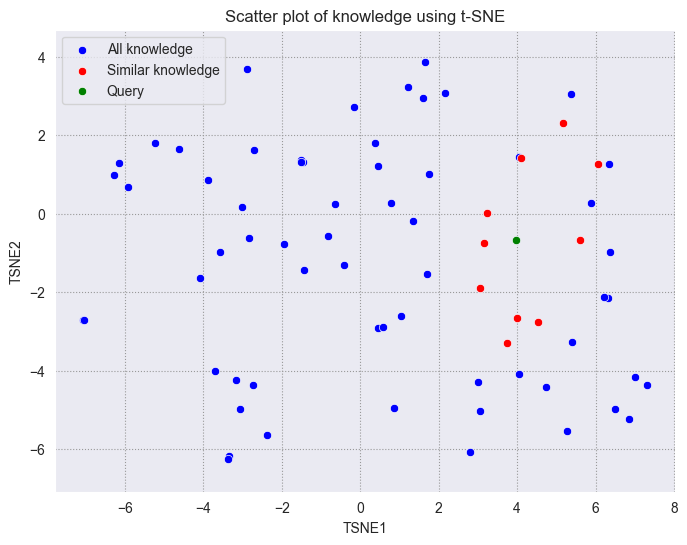

To understand why infrastructure choices matter for vector databases, one must first grasp the computational demands of high-dimensional vector search. Unlike traditional relational databases that rely on exact keyword matching, vector databases perform semantic similarity searches using algorithms like Hierarchical Navigable Small World (HNSW).
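To make the idea of similarity search concrete, here is a minimal sketch of exact (brute-force) cosine search in plain Python. It is not how Qdrant works internally: HNSW exists precisely to avoid scanning every vector. The toy vectors and the `brute_force_search` helper are illustrative assumptions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def brute_force_search(query, vectors, top_k=2):
    """Score every stored vector against the query and return the best top_k as (index, score)."""
    scored = [(cosine_similarity(query, vec), idx) for idx, vec in enumerate(vectors)]
    scored.sort(reverse=True)
    return [(idx, score) for score, idx in scored[:top_k]]


# Hypothetical 3-dimensional "embeddings"; real models produce hundreds of dimensions.
toy_vectors = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

nearest = brute_force_search([1.0, 0.05, 0.0], toy_vectors, top_k=2)
print(nearest)
```

The cost of this scan grows linearly with the number of vectors, which is why approximate graph indexes become necessary at scale.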

The Architecture of Qdrant

Qdrant distinguishes itself from alternatives such as Milvus, Pinecone, and Weaviate by combining performance with flexibility. Built in Rust, it provides memory safety and low-level resource control, which is essential when managing billions of vectors. However, the software is only as good as the hardware it runs on. The efficiency of HNSW graph traversal depends heavily on available Random Access Memory (RAM) and memory bandwidth, because each hop through the graph is a random memory access.
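A rough sizing calculation shows why RAM dominates the hardware conversation. The sketch below assumes float32 vectors and a 1.5x overhead factor for the index and metadata, a rule of thumb rather than a guarantee; the function names are hypothetical and real usage depends on payloads, HNSW settings, and quantization.

```python
def estimate_ram_bytes(num_vectors, dim, bytes_per_component=4, overhead_factor=1.5):
    """Back-of-the-envelope RAM estimate: raw vector bytes times an assumed overhead factor."""
    return int(num_vectors * dim * bytes_per_component * overhead_factor)


def to_gib(num_bytes):
    """Convert bytes to gibibytes."""
    return num_bytes / (1024 ** 3)


for count in (1_000_000, 100_000_000):
    estimate = estimate_ram_bytes(count, dim=1536)
    print(f"{count:>11,} vectors x 1536 dims: ~{to_gib(estimate):.1f} GiB")
```

Estimates like this are a starting point for choosing an instance size; measure actual memory use on a representative sample before committing.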

When deploying Qdrant on cloud infrastructure, the goal is to minimize latency. This involves selecting instances that offer NVMe storage for fast persistence and high-throughput networking for distributed deployments. This is particularly relevant given NVIDIA's recent work on GPU-accelerated vector indexing, which Qdrant and other engines are increasingly adopting to speed up ingestion.

Setting Up an Optimized Collection

Let’s look at how to initialize a Qdrant collection with optimization in mind. In this example, we will configure the HNSW parameters to balance search speed and precision—a critical step often overlooked in basic tutorials.

    from qdrant_client import QdrantClient
    from qdrant_client.http import models

    # Initialize the client (assuming a local Docker instance or cloud endpoint)
    client = QdrantClient(host="localhost", port=6333)

    # Define the collection name
    collection_name = "production_knowledge_base"

    # Create a collection with tuned HNSW settings
    # m: Number of edges per node in the graph. Higher = better recall, more memory, slower build.
    # ef_construct: Size of the candidate list during index construction. Higher = better index quality, slower build.
    # Note: recreate_collection drops any existing collection with this name. Newer qdrant-client
    # releases deprecate it in favor of collection_exists() followed by create_collection().
    client.recreate_collection(
        collection_name=collection_name,
        vectors_config=models.VectorParams(
            size=384,  # Must match the embedding model: all-MiniLM-L6-v2 (used below) outputs 384 dims; OpenAI embeddings commonly use 1536
            distance=models.Distance.COSINE,
        ),
        optimizers_config=models.OptimizersConfigDiff(
            default_segment_number=2,  # Optimizing for multi-threading
            memmap_threshold=20000,  # Segments larger than this (in KB) are stored as memory-mapped files
        ),
        hnsw_config=models.HnswConfigDiff(
            m=16,
            ef_construct=100,
            full_scan_threshold=10000,
        ),
    )

    print(f"Collection '{collection_name}' created successfully with optimized settings.")

In the code above, memmap_threshold is a crucial parameter for cost optimization. Once a segment grows beyond this size (specified in kilobytes), Qdrant stores its vectors in memory-mapped files on disk and relies on the OS page cache to keep frequently accessed data in RAM. This makes Qdrant a good fit for cloud instances that offer large NVMe storage but limited RAM, a common scenario in cost-effective AI deployments.
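To get a feel for what a memmap_threshold of 20000 means in practice, the following sketch converts a threshold in kilobytes into an approximate vector count. It assumes the threshold is compared against raw float32 vector data with 1 KB = 1024 bytes; the helper name is hypothetical.

```python
def vectors_per_threshold(threshold_kb, dim, bytes_per_component=4):
    """Approximate number of float32 vectors a segment can hold before crossing the threshold."""
    bytes_per_vector = dim * bytes_per_component
    return (threshold_kb * 1024) // bytes_per_vector


for dim in (384, 1536):
    count = vectors_per_threshold(20000, dim)
    print(f"dim={dim}: roughly {count} vectors per segment before mmap kicks in")
```

Higher-dimensional embeddings reach the threshold with far fewer vectors, so the same setting behaves differently across embedding models.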

Section 2: Implementing the RAG Pipeline

Integrating vector databases into application workflows is a core concern of frameworks such as LangChain and LlamaIndex. A robust RAG pipeline involves three stages: ingestion (embedding), storage (indexing), and retrieval (search). The choice of embedding model significantly impacts the quality of your search results.

Embedding Strategies

Developers currently have a plethora of choices. You might rely on APIs from providers such as Cohere or Amazon Bedrock, or you might run open-source models locally. Running models like BERT or RoBERTa on the same cloud infrastructure as your vector database can significantly reduce network latency.

Below is an implementation that uses the Sentence Transformers library (whose models are hosted on the Hugging Face Hub) to generate embeddings and upsert them into Qdrant. This approach gives you full control over data privacy and the processing pipeline.

    import uuid

    from qdrant_client import QdrantClient
    from qdrant_client.http import models
    from sentence_transformers import SentenceTransformer

    # Load a compact local model
    # 'all-MiniLM-L6-v2' is fast and effective for many use cases. It outputs 384-dimensional
    # vectors, so the target collection must be created with size=384.
    encoder = SentenceTransformer("all-MiniLM-L6-v2")
    client = QdrantClient(host="localhost", port=6333)
    collection_name = "production_knowledge_base"

    # Sample dataset simulating a technical documentation knowledge base
    documents = [
        {"text": "Qdrant is a vector database written in Rust.", "category": "database"},
        {"text": "Kubernetes manages containerized applications.", "category": "infrastructure"},
        {"text": "Neural networks require significant GPU compute.", "category": "ml_theory"},
        {"text": "Vultr provides high-performance cloud compute instances.", "category": "cloud_provider"},
    ]

    # Batch processing for efficiency: encode all texts in a single call
    vectors = encoder.encode([doc["text"] for doc in documents])

    # Create one point per document, attaching the document itself as the payload
    points = [
        models.PointStruct(id=str(uuid.uuid4()), vector=vector.tolist(), payload=doc)
        for vector, doc in zip(vectors, documents)
    ]

    # Upsert points into Qdrant and wait until the operation has been applied
    operation_info = client.upsert(
        collection_name=collection_name,
        wait=True,
        points=points,
    )

    print(f"Upsert status: {operation_info.status}")

This script demonstrates the “extract-transform-load” (ETL) process for vector search. By attaching a payload (metadata) to each vector, we enable filtered search: restricting results by category while ranking them by semantic meaning. This is a standard pattern in retrieval frameworks such as Haystack.
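With four documents a single upsert is fine, but larger datasets are usually sent in fixed-size chunks to bound request size and memory. Here is a minimal sketch of a chunking helper in plain Python; the `batched` name and the batch size of 4 are illustrative assumptions, and each yielded batch would be passed to its own upsert call.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield successive lists of at most batch_size items from any iterable."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Stand-in for a list of points; in the ingestion script these would be PointStruct objects.
batches = list(batched(range(10), 4))
print(batches)
```

A suitable batch size depends on vector dimensionality and payload size, so it is worth tuning against your own data.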

Section 3: Advanced Techniques: Quantization and Hybrid Search

As your dataset grows from thousands to millions or billions of vectors, hardware costs can skyrocket. This is where advanced optimization techniques come into play. Qdrant's quantization capabilities allow for significant memory reduction with minimal loss in accuracy.

Binary and Scalar Quantization

Quantization compresses high-dimensional vectors. Relative to 32-bit floats, Scalar Quantization (int8) reduces vector size by 4x, while Binary Quantization can reduce it by up to 32x. This allows you to fit much larger datasets into the RAM of standard cloud instances, democratizing access to large-scale search.
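The 4x and 32x figures follow directly from the storage width per component. The sketch below illustrates both schemes on a toy vector in plain Python. It is a simplified model, not Qdrant's internal encoding: the fixed `lo`/`hi` clipping bounds stand in for quantile-derived bounds, and all function names are hypothetical.

```python
def scalar_quantize(vector, lo, hi):
    """Map floats in [lo, hi] to 8-bit codes (0..255), clipping values outside the range."""
    scale = (hi - lo) / 255
    codes = [round((min(max(x, lo), hi) - lo) / scale) for x in vector]
    return codes, scale


def scalar_dequantize(codes, lo, scale):
    """Approximate reconstruction of the original floats from 8-bit codes."""
    return [lo + c * scale for c in codes]


def binary_quantize(vector):
    """Keep only the sign of each component: 1 bit per dimension."""
    return [1 if x > 0 else 0 for x in vector]


vector = [0.12, -0.40, 0.98, -1.50, 0.33]
codes, scale = scalar_quantize(vector, lo=-1.0, hi=1.0)
restored = scalar_dequantize(codes, lo=-1.0, scale=scale)
bits = binary_quantize(vector)

# Storage per vector for a 1536-dimensional embedding
dim = 1536
float32_bytes = dim * 4   # 32 bits per component
int8_bytes = dim * 1      # 8 bits per component
binary_bytes = dim // 8   # 1 bit per component

print(f"int8 codes: {codes}, bits: {bits}")
print(f"float32={float32_bytes} B, int8={int8_bytes} B, binary={binary_bytes} B")
```

Note that the out-of-range value (-1.50) is clipped to the lower bound, which is the price paid for finer resolution across the typical range.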

Furthermore, combining keyword search (BM25) with vector search (Dense Retrieval) is becoming the gold standard. This approach, long associated with Elasticsearch and now also supported by Qdrant through sparse vectors, ensures that exact matches (like part numbers or specific error codes) are not lost in the semantic abstraction.
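One common way to merge a keyword ranking with a dense ranking is Reciprocal Rank Fusion (RRF), which needs only the rank positions, not comparable scores. The sketch below is a plain-Python illustration under assumed inputs: the document IDs are made up, and k=60 is a conventional default rather than a tuned value.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of IDs; each appearance contributes 1 / (k + rank)."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical results: the keyword search nails the exact error code,
# while the dense search prefers semantically related documents.
keyword_hits = ["err-4012", "doc-a", "doc-b"]
dense_hits = ["doc-a", "doc-c", "err-4012"]

fused = reciprocal_rank_fusion([keyword_hits, dense_hits])
print(fused)
```

Documents that appear in both lists rise to the top, so an exact match found only by keyword search still competes with semantically similar results.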

Here is how to implement search with quantization and payload filtering, ensuring high performance even on constrained infrastructure:

    # Reuses client, encoder, models, and collection_name from the previous snippet

    # Enabling quantization on an existing collection
    client.update_collection(
        collection_name=collection_name,
        quantization_config=models.ScalarQuantization(
            scalar=models.ScalarQuantizationConfig(
                type=models.ScalarType.INT8,
                quantile=0.99,  # Exclude the most extreme 1% of values from the quantization bounds
                always_ram=True,  # Keep quantized vectors in RAM even when originals live on disk
            )
        ),
    )

    # Performing a filtered search
    query_text = "How to deploy databases on cloud?"
    query_vector = encoder.encode(query_text).tolist()

    # Define a filter to only search within specific categories
    search_filter = models.Filter(
        must=[
            models.FieldCondition(
                key="category",
                match=models.MatchValue(value="database"),
            )
        ]
    )

    # Note: newer qdrant-client releases deprecate search() in favor of query_points()
    search_result = client.search(
        collection_name=collection_name,
        query_vector=query_vector,
        query_filter=search_filter,
        limit=3,
        search_params=models.SearchParams(
            quantization=models.QuantizationSearchParams(
                ignore=False,
                rescore=True,  # Rescore top-k results with full precision vectors
            )
        ),
    )

    for hit in search_result:
        print(f"Score: {hit.score:.4f}, Text: {hit.payload['text']}")

The rescore=True parameter is a vital technique. It performs the initial broad search on the compressed (quantized) vectors for speed, then fetches the full-precision vectors for the top candidates and recalculates their exact scores. The same balance between precision and speed drives inference optimization tools such as TensorRT and ONNX.
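The two-stage idea behind rescoring can be sketched in a few lines of plain Python. This is an illustration, not Qdrant's implementation: it uses sign bits and Hamming distance as a stand-in for the quantized index, and the `oversampling` factor and toy vectors are assumptions chosen to show a case where the coarse stage alone would pick the wrong winner.

```python
def to_bits(vector):
    """Coarse code: one sign bit per component."""
    return [1 if x > 0 else 0 for x in vector]


def hamming(a, b):
    """Number of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def dot(a, b):
    """Exact full-precision similarity."""
    return sum(x * y for x, y in zip(a, b))


def search_with_rescore(query, vectors, limit=1, oversampling=3):
    """Stage 1: shortlist by cheap coarse distance. Stage 2: re-rank the shortlist exactly."""
    query_bits = to_bits(query)
    by_coarse = sorted(range(len(vectors)), key=lambda i: hamming(query_bits, to_bits(vectors[i])))
    candidates = by_coarse[: limit * oversampling]
    rescored = sorted(candidates, key=lambda i: dot(query, vectors[i]), reverse=True)
    return rescored[:limit]


vectors = [
    [0.1, 0.1, -0.9],    # same sign pattern as the query, but a weak exact match
    [0.9, 0.8, -0.1],    # same sign pattern and the best exact match
    [0.2, 0.9, -0.3],
    [-0.5, -0.5, 0.5],
]
query = [1.0, 0.9, -0.2]

top = search_with_rescore(query, vectors, limit=1)
print(top)
```

The first three vectors are indistinguishable at the coarse stage; only the full-precision pass separates them, which is why rescoring recovers accuracy lost to compression.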

Section 4: Best Practices, MLOps, and the Ecosystem

Deploying Qdrant is not just about the code; it’s about the ecosystem. Integrating your vector database into a broader MLOps pipeline ensures reliability and scalability. Tools such as MLflow, Weights & Biases, and ClearML reflect a trend towards full observability of the RAG pipeline.

Monitoring and Observability

You must monitor both the latency of your embeddings (inference speed) and the latency of your vector retrieval (database speed). If you are using OpenVINO-optimized models on Intel CPUs or Triton Inference Server for GPU serving, these metrics should be correlated with Qdrant’s telemetry.
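A lightweight way to start is to time each stage separately and look at percentiles rather than averages. The sketch below uses only the standard library. The stage names and the `time.sleep` calls are placeholders for real embedding and retrieval calls, and a production setup would export these numbers to a metrics system instead of keeping them in a dictionary.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager

# Per-stage latency samples in milliseconds
latencies_ms = defaultdict(list)


@contextmanager
def timed(stage):
    """Record the wall-clock duration of the enclosed block under the given stage name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        latencies_ms[stage].append((time.perf_counter() - start) * 1000)


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    index = max(0, math.ceil(pct / 100 * len(ordered)) - 1)
    return ordered[index]


for _ in range(3):
    with timed("embedding"):
        time.sleep(0.001)  # placeholder for the embedding call
    with timed("retrieval"):
        time.sleep(0.001)  # placeholder for the vector search call

for stage, samples in latencies_ms.items():
    print(f"{stage}: p50={percentile(samples, 50):.2f} ms, p95={percentile(samples, 95):.2f} ms")
```

Tracking the two stages separately makes it clear whether a slow request is caused by model inference or by the database.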

Choosing the Right Cloud Infrastructure

The synergy between software and hardware cannot be overstated. When evaluating platforms such as AWS SageMaker, Azure Machine Learning, or Google Colab, pay attention to the underlying compute types. For Qdrant:

- Memory: Prioritize RAM. HNSW graphs are memory-hungry.

- Storage: Use NVMe SSDs. If using memory mapping, disk speed directly affects search speed once the working set no longer fits in RAM.

- Network: Low latency is key for distributed setups (sharding).

Integration with Modern Frameworks

The AI stack is becoming standardized. FastAPI has become a common choice for serving AI apps, while Streamlit and Gradio dominate the UI prototyping space. Ensuring your Qdrant instance connects seamlessly with these tools is essential.

Here is a snippet demonstrating a minimal API endpoint using FastAPI that interfaces with Qdrant, a pattern that also suits teams using LlamaFactory or Ollama for local LLM serving:

    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel
    from qdrant_client import QdrantClient
    from sentence_transformers import SentenceTransformer

    app = FastAPI()

    # Load the encoder and client once at startup, not on every request
    encoder = SentenceTransformer("all-MiniLM-L6-v2")
    client = QdrantClient(host="localhost", port=6333)


    class SearchRequest(BaseModel):
        query: str
        top_k: int = 5


    # Declared with plain `def` so FastAPI runs the blocking encode/search calls in its thread pool
    @app.post("/search")
    def search_endpoint(request: SearchRequest):
        try:
            # Embed the query with the same model used at ingestion time
            vector = encoder.encode(request.query).tolist()
            results = client.search(
                collection_name="production_knowledge_base",
                query_vector=vector,
                limit=request.top_k,
            )
            return {"results": [hit.payload for hit in results]}
        except Exception as e:
            # In a real app, log this error to Sentry or Datadog
            raise HTTPException(status_code=500, detail=str(e))

    # This setup is compatible with deployment on Vultr, AWS, or RunPod infrastructure.

Conclusion

The intersection of advanced vector databases like Qdrant and high-performance cloud infrastructure is defining the next generation of AI applications. Qdrant's trajectory makes it clear that the focus is shifting from simple vector storage to complex, optimized retrieval engines capable of handling multi-modal data at scale.

Whether you work with Meta AI's Llama models or Stability AI's image generators, the need for a persistent, semantic memory layer remains constant. By leveraging techniques like quantization, HNSW optimization, and proper cloud architecture, developers can build RAG systems that are not only accurate but also cost-effective and fast.

As tools like LangSmith and DataRobot continue to evolve, the integration between the orchestration layer and the database layer will tighten. For engineers, the takeaway is clear: master the vector database, optimize the underlying infrastructure, and you will hold the key to unlocking the full potential of Generative AI.