vLLM News: Mastering Enterprise-Grade GenAI Inference for Hybrid Cloud Architectures

Introduction

The landscape of Generative AI is shifting rapidly from experimental notebooks to robust, production-grade deployments. As organizations move beyond the initial hype cycle, the focus has squarely landed on inference efficiency, latency reduction, and cost optimization. In the realm of vLLM News, the narrative is evolving from simple model serving to complex, containerized solutions designed for the hybrid cloud. The challenge is no longer just about running a Large Language Model (LLM); it is about running it at scale, securely, and efficiently across diverse infrastructure environments.

Recent developments in the open-source community have highlighted the critical role of high-performance inference engines. While training frameworks dominate TensorFlow and PyTorch coverage, the inference layer is where business value is realized. Tools like vLLM have emerged as a widely adopted choice for high-throughput serving, using memory management techniques that let them batch more requests per GPU than traditional serving stacks. This shift is particularly vital for enterprises building on platforms from Red Hat or on AWS SageMaker, where resource utilization directly correlates with operational expenditure.

In this guide, we will explore how vLLM makes GenAI practical in production. We will delve into the architecture of PagedAttention, demonstrate how to deploy OpenAI-compatible servers, and discuss advanced strategies for hybrid cloud integration. Whether you are following NVIDIA AI News for hardware updates or Hugging Face News for the latest models, understanding vLLM is now a prerequisite for modern AI engineering.

Section 1: The Architecture of Efficiency – Core Concepts

To understand why vLLM has become a headline topic in Machine Learning News, one must understand the bottleneck it solves: Key-Value (KV) cache memory fragmentation. In traditional serving systems, memory for each request's KV cache is allocated as one contiguous chunk. However, because the output length of an LLM is unknown prior to generation, these systems often reserve space for the maximum possible sequence length, leading to significant waste and smaller batch sizes.
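To make the over-allocation concrete, here is a back-of-the-envelope calculation. The model shape (32 layers, 32 KV heads, head dimension 128, fp16) and the request lengths are illustrative assumptions, not measurements from any particular deployment.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, dtype_bytes=2):
    """Bytes of KV cache one token occupies: a key and a value vector per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * dtype_bytes


# Assumed 7B-class shape: 32 layers, 32 KV heads, head dimension 128, fp16.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=32, head_dim=128)

max_len = 2048     # slots reserved up front by a contiguous allocator (assumed)
actual_len = 200   # tokens the request really used, prompt plus output (assumed)

reserved = per_token * max_len
used = per_token * actual_len
wasted_fraction = 1 - used / reserved

print(f"KV cache per token:   {per_token / 2**20:.2f} MiB")
print(f"Reserved per request: {reserved / 2**30:.2f} GiB")
print(f"Used per request:     {used / 2**20:.1f} MiB")
print(f"Wasted:               {wasted_fraction:.0%}")
```

Under these assumptions a single short request pins about 1 GiB of GPU memory while using roughly a tenth of it, which is the waste that limits batch size.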

PagedAttention: The Game Changer

vLLM introduces PagedAttention, an algorithm inspired by virtual memory paging in operating systems. Instead of one contiguous region per request, PagedAttention stores the KV cache in fixed-size blocks that need not be adjacent in memory, and a per-sequence block table maps logical positions to physical blocks. This seemingly simple architectural change allows the inference engine to batch significantly more requests together. The vLLM authors report 2x to 4x higher throughput than earlier serving systems such as FasterTransformer and Orca at comparable latency, and larger gains over a plain Hugging Face Transformers generation loop.
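The bookkeeping behind this idea can be sketched with a toy allocator. This is not vLLM's implementation: the class name `PagedKVCache` and its methods are hypothetical, and it tracks only which blocks belong to which sequence, with no tensors involved.

```python
class PagedKVCache:
    """Toy block-table allocator; it tracks block ownership only, no tensors."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}   # sequence id -> list of physical block ids
        self.lengths = {}        # sequence id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve space for one more token, taking a new block only when needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:   # every owned block is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks; a real engine would preempt")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=8, block_size=4)
for _ in range(5):
    cache.append_token("a")   # 5 tokens need 2 blocks
for _ in range(3):
    cache.append_token("b")   # 3 tokens need 1 block
for _ in range(4):
    cache.append_token("a")   # 9 tokens need a third block, not adjacent to the first two

print(cache.block_tables)     # {'a': [0, 1, 3], 'b': [2]}
cache.free("b")
print(cache.free_blocks)      # [4, 5, 6, 7, 2]
```

Sequence "a" ends up in blocks 0, 1, and 3 because block 2 was handed to "b" in between. At most one partially filled block per sequence is ever wasted, instead of a whole maximum-length reservation.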

This efficiency is crucial when deploying models from Mistral AI or Meta AI (like Llama 3) in containerized environments. By maximizing GPU utilization, organizations can serve more users with fewer hardware resources, a critical factor for Azure AI and Google Cloud Vertex AI deployments, where GPU hours are billed directly.

Basic Offline Inference Implementation

Let’s look at how to implement a basic offline inference pipeline using vLLM. This approach is ideal for batch processing tasks, such as data augmentation or offline summarization, which often appear in LangChain and LlamaIndex tutorials.

    from vllm import LLM, SamplingParams

    # Initialize the LLM with a specific model from Hugging Face.
    # We use a quantized (AWQ) checkpoint here for memory efficiency,
    # a common topic in recent PyTorch News.
    llm = LLM(
        model="TheBloke/Mistral-7B-Instruct-v0.2-AWQ",
        quantization="awq",
        dtype="float16",
    )

    # Define sampling parameters:
    # temperature controls randomness, top_p controls diversity,
    # max_tokens caps the length of each completion.
    sampling_params = SamplingParams(temperature=0.7, top_p=0.95, max_tokens=100)

    # Prepare a list of prompts
    prompts = [
        "Explain the concept of PagedAttention in simple terms.",
        "Write a Python function to calculate the Fibonacci sequence.",
        "What are the benefits of hybrid cloud for AI?",
    ]

    # Generate outputs (all prompts are batched by the engine)
    outputs = llm.generate(prompts, sampling_params)

    # Process and print the results
    for output in outputs:
        prompt = output.prompt
        generated_text = output.outputs[0].text
        print(f"Prompt: {prompt!r}\nGenerated text: {generated_text!r}\n{'-'*50}")

In the code above, the LLM class handles model loading and memory management automatically. The engine manages the KV cache with PagedAttention behind the scenes, so even when we send hundreds of prompts it batches them and uses GPU memory efficiently. This level of abstraction lets data scientists focus on application logic rather than low-level CUDA memory management, a philosophy shared by Fast.ai and Keras.

Section 2: Building a Production-Ready Inference Server

While offline inference is useful, the real power of vLLM lies in its ability to act as a high-performance backend for real-time applications. In the context of vLLM News, the ability to spin up an OpenAI-compatible API server is a standout feature. It allows developers to replace paid hosted APIs such as OpenAI's with self-hosted open-source models while leaving client code that already targets the OpenAI API largely unchanged. Applications written against a different provider's SDK, such as Anthropic's, first need to be pointed at an OpenAI-style endpoint.

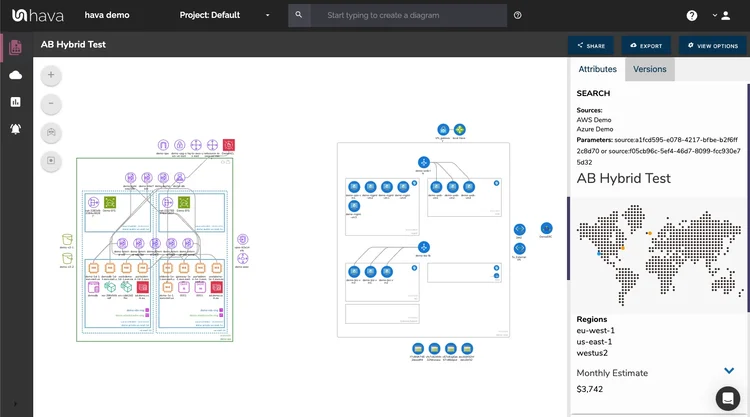

Containerization and Hybrid Cloud

For hybrid cloud deployments that span on-premise data centers and public cloud services like IBM Watson or Oracle Cloud, containerization is key. Wrapping vLLM in a Docker container ensures that the same image, with the same CUDA libraries and dependencies, runs in every environment. This aligns with the “write once, run anywhere” philosophy essential for modern MLOps, a recurring theme around MLflow and ClearML.

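When deployed as a server, vLLM uses continuous batching. With static batching, a batch runs until its longest request finishes, so short requests hold idle slots and queued requests wait. With continuous batching, the scheduler works at the level of individual decoding steps: as soon as one sequence finishes, a waiting request takes its slot while the rest of the batch keeps generating. This drastically reduces queueing latency for the end user and keeps the GPU busy.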
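The difference between the two scheduling policies can be illustrated with a small simulation. It is a simplified model, not vLLM's scheduler: each request is reduced to the number of decoding steps it needs, every step costs the same, and the function names are hypothetical.

```python
def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Refill free slots from the queue before every decoding step."""
    waiting = list(lengths)
    running = []
    steps = 0
    while waiting or running:
        while waiting and len(running) < batch_size:
            running.append(waiting.pop(0))
        # One decoding step: every running request produces one token.
        running = [remaining - 1 for remaining in running]
        running = [remaining for remaining in running if remaining > 0]
        steps += 1
    return steps


output_lengths = [2, 8, 3, 8, 2, 2]   # decoding steps each request needs (made up)
static_steps = static_batching_steps(output_lengths, batch_size=2)
continuous_steps = continuous_batching_steps(output_lengths, batch_size=2)
print(f"static: {static_steps} steps, continuous: {continuous_steps} steps")
```

With these made-up lengths, static batching needs 18 steps and continuous batching needs 13, because short requests no longer leave a slot idle while a long neighbor finishes.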

Client-Side Integration

Once your vLLM server is running (usually via a command like python -m vllm.entrypoints.openai.api_server with a --model argument), you can interact with it using standard libraries. Below is an example of how to build a client application that connects to a self-hosted vLLM instance. Because it uses the standard OpenAI client, this setup also works with tools such as LangSmith for tracing and evaluation.

    from openai import OpenAI
    import time

    # Configure the client to point to your self-hosted vLLM server.
    # This could be running on a local GPU, a RunPod instance, or an AWS EC2 node.
    client = OpenAI(
        base_url="http://localhost:8000/v1",
        api_key="EMPTY",  # vLLM server usually doesn't require a key by default
    )

    def stream_response(prompt):
        """
        Demonstrates streaming response, essential for good UX in chatbots
        (common in Streamlit and Chainlit apps).
        """
        try:
            start_time = time.time()
            stream = client.chat.completions.create(
                # Must match the model name the server was started with
                model="TheBloke/Mistral-7B-Instruct-v0.2-AWQ",
                messages=[{"role": "user", "content": prompt}],
                stream=True,
                temperature=0.1,
            )

            print(f"Response to: '{prompt}'")
            collected_messages = []
            for chunk in stream:
                # Some chunks carry no choices or no text; skip them
                if not chunk.choices:
                    continue
                content = chunk.choices[0].delta.content
                if content is not None:
                    print(content, end="", flush=True)
                    collected_messages.append(content)

            print(f"\n\n[Time taken: {time.time() - start_time:.2f}s]")
            return "".join(collected_messages)
        except Exception as e:
            print(f"Error communicating with inference server: {e}")

    if __name__ == "__main__":
        stream_response("Design a microservices architecture for an AI application.")

This code demonstrates the ease of migration. If you have built an application using LangChain or AutoML tools that rely on OpenAI, switching to a private, secure vLLM backend is largely a matter of changing the base_url and the model name.

Section 3: Advanced Techniques – Scaling and Optimization

As we delve deeper into vLLM News, we encounter scenarios requiring massive scale. Enterprise models are growing larger; deploying a 70B parameter model or a Mixture-of-Experts (MoE) model like Mixtral requires advanced strategies. This is where concepts from Ray and DeepSpeed intersect with vLLM.

Tensor Parallelism and Distributed Inference

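A single GPU often lacks the VRAM to hold large models. vLLM supports Tensor Parallelism, allowing a model’s weights to be split across multiple GPUs. This is distinct from the data parallelism often discussed around Apache Spark MLlib or Dask, where each worker holds a full copy of the model and processes different data. In Tensor Parallelism, each device holds a slice of every layer's weight matrices, computes its part of the layer simultaneously with the others, and the partial results are combined through collective communication.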
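The core idea can be shown without any GPU. The sketch below splits a weight matrix by output columns across two simulated devices and checks that concatenating the partial results reproduces the full product. It is a pure-Python illustration with made-up numbers and hypothetical helper names; real engines do this with GPU kernels and collective operations.

```python
def matmul(x, w):
    """Multiply a row vector x (length k) by a k x n matrix w."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_shards):
    """Give each simulated device an equal slice of the output columns."""
    n = len(w[0])
    assert n % num_shards == 0, "output width must divide evenly across devices"
    width = n // num_shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(num_shards)]


x = [1.0, 2.0, 3.0]
w = [
    [1.0, 0.0, 2.0, 1.0],
    [0.0, 1.0, 1.0, 3.0],
    [2.0, 1.0, 0.0, 1.0],
]

shards = split_columns(w, num_shards=2)
partials = [matmul(x, shard) for shard in shards]            # one result per device
gathered = [value for part in partials for value in part]    # the all-gather step

print(gathered)                    # [7.0, 5.0, 4.0, 10.0]
print(gathered == matmul(x, w))    # True
```

Each simulated device stores only half of the weights, which is what lets a model that is too large for one GPU fit across several.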

Furthermore, for hybrid cloud scenarios where data sovereignty is paramount (a key topic around DataRobot and Snowflake Cortex) and models must run on whatever GPUs are available on-premise, reducing the memory footprint via quantization is essential. vLLM supports AWQ and GPTQ quantization out of the box; SqueezeLLM support depends on the release, so check the documentation for your version.
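A rough sizing calculation shows how quantization and tensor parallelism trade off. The helper below is a hypothetical estimate that counts weights only; it ignores the KV cache, activations, and the scale and zero-point metadata that quantized checkpoints carry, so real usage will be higher.

```python
def weight_gib_per_gpu(num_params, bits_per_weight, tensor_parallel_size=1):
    """Approximate weight memory per GPU in GiB (weights only, no overheads)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / tensor_parallel_size / 2**30


params_70b = 70e9   # nominal parameter count for a 70B model
for bits, tp in [(16, 1), (16, 4), (4, 1), (4, 2)]:
    gib = weight_gib_per_gpu(params_70b, bits, tp)
    print(f"{bits}-bit weights, tensor_parallel_size={tp}: {gib:.1f} GiB per GPU")
```

By this estimate, 4-bit weights on one GPU take about as much memory as 16-bit weights spread over four GPUs, which is why quantization matters when hardware is constrained.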

Advanced Configuration Code

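The following example demonstrates how to configure vLLM for a multi-GPU environment using tensor parallelism. The code does not configure Ray explicitly; vLLM picks a distributed executor (Ray or multiprocessing, depending on the version and setup) to coordinate the GPU workers. Tensor parallelism by itself does not provide high availability or fault tolerance, which typically come from running several replicas behind a load balancer.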

    from vllm import LLM, SamplingParams
    import torch

    # Check for GPU availability
    gpu_count = torch.cuda.device_count()
    if gpu_count == 0:
        raise SystemExit("No CUDA GPUs detected; this example needs at least one.")
    print(f"Detected {gpu_count} GPUs. Configuring Tensor Parallelism...")

    # Configuration for a large model (e.g., Llama-3-70B).
    # tensor_parallel_size must evenly divide the model's attention head count,
    # so using every detected GPU only works for counts such as 2, 4, or 8.
    # gpu_memory_utilization is the fraction of each GPU's memory vLLM may use
    # for weights, activations, and the KV cache.
    llm = LLM(
        model="meta-llama/Meta-Llama-3-70B-Instruct",
        tensor_parallel_size=gpu_count,
        gpu_memory_utilization=0.90,
        max_model_len=4096,
        dtype="bfloat16",     # Recommended for newer GPUs (Ampere/Hopper)
        enforce_eager=False,  # Keep CUDA graph capture enabled
    )

    # Advanced sampling with penalties to reduce repetition.
    # Relevant for creative writing tasks often seen in Stability AI News.
    sampling_params = SamplingParams(
        temperature=0.8,
        top_p=0.9,
        presence_penalty=1.1,   # Penalize tokens that have already appeared
        frequency_penalty=1.1,  # Penalize tokens in proportion to how often they appeared
        max_tokens=256,         # Set explicitly; vLLM's default is only 16 tokens
    )

    prompts = [
        "Analyze the geopolitical implications of AI regulation.",
        "Draft a press release for a new hybrid cloud product.",
    ]

    outputs = llm.generate(prompts, sampling_params)

    for output in outputs:
        print(f"Generated {len(output.outputs[0].token_ids)} tokens.")
        print(output.outputs[0].text[:200] + "...")  # Print snippet

This snippet highlights the intersection of hardware and software. vLLM launches one worker per GPU (through Ray or multiprocessing, depending on the version and configuration) and manages the communication between them. This setup is typical of high-performance clusters on RunPod, Modal, or private enterprise infrastructure.

Section 4: Best Practices, Observability, and Integration

Deploying the model is only half the battle. Maintaining it requires robust observability and integration with the broader MLOps ecosystem. vLLM News frequently emphasizes the importance of metrics for production stability.

Monitoring and Metrics

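vLLM exposes Prometheus metrics at the /metrics endpoint of its API server. In a hybrid cloud environment, scraping these metrics is vital for auto-scaling. You should monitor vllm:gpu_cache_usage_perc (sustained values near 1.0 mean the KV cache is saturated, so requests queue up or get preempted) and vllm:num_requests_running. Exact metric names can change between vLLM versions, so check them against your server's output. Integrating these with Weights & Biases or Comet ML dashboards provides visibility into model performance over time.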
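As a rough sketch of how an autoscaler might consume that endpoint, the code below parses the Prometheus text format and applies a simple threshold rule to the gauges mentioned above, as exposed with the `vllm:` prefix. The sample payload is hand-written for illustration, `vllm:num_requests_waiting` and the 0.9 threshold are assumptions to adapt, and the parser ignores optional timestamps.

```python
from urllib.request import urlopen


def parse_prometheus_text(text):
    """Parse Prometheus text exposition lines into {metric_name: [values]}."""
    metrics = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):   # skip HELP and TYPE comments
            continue
        name_and_labels, _, value = line.rpartition(" ")
        name = name_and_labels.split("{", 1)[0]
        metrics.setdefault(name, []).append(float(value))
    return metrics


def fetch_metrics(base_url="http://localhost:8000"):
    """Fetch and parse /metrics from a running server (the URL is an assumption)."""
    with urlopen(f"{base_url}/metrics", timeout=5) as response:
        return parse_prometheus_text(response.read().decode("utf-8"))


def should_scale_up(metrics, cache_threshold=0.9):
    """Hypothetical rule: scale when the KV cache is nearly full or requests queue."""
    usage = max(metrics.get("vllm:gpu_cache_usage_perc", [0.0]))
    waiting = sum(metrics.get("vllm:num_requests_waiting", [0.0]))
    return usage >= cache_threshold or waiting > 0


# Hand-written sample in Prometheus text format, not captured from a real server.
sample = """\
# HELP vllm:num_requests_running Number of requests currently running on GPU.
# TYPE vllm:num_requests_running gauge
vllm:num_requests_running{model_name="demo"} 12.0
vllm:num_requests_waiting{model_name="demo"} 0.0
vllm:gpu_cache_usage_perc{model_name="demo"} 0.93
"""
parsed = parse_prometheus_text(sample)
print(parsed)
print(should_scale_up(parsed))
```

In practice, `fetch_metrics()` would replace the sample string, and a Prometheus server with alert rules would usually take the place of a hand-rolled loop.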

Vector Database Integration

For RAG (Retrieval-Augmented Generation) applications, vLLM servers are often paired with vector databases. Whether you use Pinecone, Milvus, Weaviate, Chroma, or Qdrant, the pattern remains the same: retrieve context, prepend it to the prompt, and send the result to vLLM.
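The retrieve-then-prepend pattern can be sketched in a few lines. The `retrieve` function below is a stand-in that ranks documents by shared words; a real system would query one of the vector databases above with embeddings. The prompt template and both function names are illustrative assumptions.

```python
def retrieve(query, documents, top_k=2):
    """Stand-in for a vector search: rank documents by shared lowercase words."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(doc.lower().split())), doc) for doc in documents]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in ranked[:top_k] if score > 0]


def build_rag_prompt(question, passages):
    """Prepend the retrieved passages to the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


documents = [
    "PagedAttention stores the KV cache in fixed-size blocks.",
    "Continuous batching admits new requests between decoding steps.",
    "Docker images package a server with its dependencies.",
]
question = "How does PagedAttention store the KV cache?"
passages = retrieve(question, documents)
prompt = build_rag_prompt(question, passages)
print(prompt)
```

The resulting string is an ordinary prompt, so it can be sent to a vLLM server as the user message of a chat completion or passed to an offline `generate` call.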

Custom API Wrapper with FastAPI

Sometimes the default OpenAI-compatible server isn’t enough. You might need custom logging, authentication, or preprocessing. Here is how you can wrap vLLM in a custom FastAPI application, adding a layer of control.

    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel
    from vllm import LLM, SamplingParams
    import uvicorn

    app = FastAPI(title="Enterprise GenAI Gateway")

    # Initialize vLLM globally so the model is loaded once at startup.
    # In production, use AsyncLLMEngine for better concurrency.
    llm_engine = LLM(model="mistralai/Mistral-7B-Instruct-v0.1")

    class InferenceRequest(BaseModel):
        prompt: str
        max_tokens: int = 100
        temperature: float = 0.7

    @app.post("/generate")
    async def generate_text(request: InferenceRequest):
        """
        Custom endpoint wrapping vLLM generation.
        Allows for custom validation, logging to Datadog/Splunk,
        or rate limiting logic.
        """
        # Cheap guard on prompt size (counted in characters, not tokens)
        if len(request.prompt) > 10000:
            raise HTTPException(status_code=400, detail="Prompt too long")

        sampling_params = SamplingParams(
            max_tokens=request.max_tokens,
            temperature=request.temperature,
        )

        # Note: generate() is synchronous and blocks the event loop, so this
        # server handles one request at a time. In a real async FastAPI app,
        # use vLLM's AsyncLLMEngine. This is a simplified example.
        outputs = llm_engine.generate([request.prompt], sampling_params)

        return {
            "text": outputs[0].outputs[0].text,
            "usage": {
                "prompt_tokens": len(outputs[0].prompt_token_ids),
                "completion_tokens": len(outputs[0].outputs[0].token_ids),
            },
        }

    if __name__ == "__main__":
        # Run with: python server.py
        uvicorn.run(app, host="0.0.0.0", port=8080)

This architectural pattern is highly relevant for teams using Streamlit or Gradio to build internal tools. It allows for the injection of business logic before the prompt ever hits the GPU.

Conclusion

The evolution of vLLM signifies a maturing Generative AI ecosystem. We are moving away from monolithic, slow inference towards agile, containerized, and highly efficient serving layers. Tools like vLLM, when combined with enterprise-grade platforms (such as those from Red Hat or Azure Machine Learning), make GenAI practical for hybrid cloud environments.

By leveraging PagedAttention, Tensor Parallelism, and standard API interfaces, developers can build systems that are not only powerful but also cost-effective. Whether you are integrating with Google DeepMind models, orchestrating with LangChain, or monitoring with Arize AI, the inference engine remains the beating heart of the application.

As we look forward, expect further integration between vLLM and model compilation and runtime frameworks like ONNX, OpenVINO, and TensorRT. The future of AI is not just about the smartest model, but the one that can be served the fastest and most efficiently. Start experimenting with vLLM today to future-proof your AI infrastructure.